CZI funds new pipeline diversity effort

April 12, 2019

The Chan Zuckerberg Initiative recently announced that it was setting up a program intended to increase the recruitment and retention of underrepresented students in STEM. This will be launched as a partnership between the University of California San Diego and Berkeley campuses and the University of Maryland Baltimore County. UMBC's Meyerhoff Scholars Program (launched in 1988), which focuses on undergraduate students, is the model that CZI intends to duplicate at UCB and UCSD.

There’s no indication of a pre-existing prototype at UCB; however, the UCSD version will apparently leverage an existing program (PATHS) led by neurobiologist Professor Gentry Patrick.

Under the new CZI collaboration, announced at an April 9 press conference, UC San Diego, UC Berkeley and the University of Maryland, Baltimore County (UMBC) will work toward a goal of replicating aspects of UMBC’s Meyerhoff Scholars Program, recognized as one of the most effective models in the country to help inspire, recruit and retain underrepresented minorities pursuing undergraduate and graduate degrees in STEM fields. UMBC is a diverse public research university whose largest demographic groups identify as white and Asian, but which also graduates more African-American students who go on to earn dual M.D.-Ph.D. degrees than any other college in U.S.—a credit to the Meyerhoff program model.

I think this is great. Now, look, yes the Meyerhoff style program and this new CZI mimic are both pipeline solutions. And you know perfectly well, Dear Reader, that I am not a fan of pipeline excuses when it comes to the NIH grant award and University professor hiring strategies. Do not mistake me, however. I am still a fan of efforts that make it easier to extend fair opportunity for individuals from groups traditionally underrepresented in the academy, and in STEM fields in particular. I also have a slight brush of experience with what UMBC is doing in terms of encouraging URM students to seek out opportunity for research training. I conclude from this that they are doing REALLY great things in terms of culture on that campus. I would very much like to see that extended to other campuses and this CZI thing would seem to be doing that. Bravo.

So what might be the vision here? Well that all depends on how serious CZI is about this and how much money they have to spend. The Meyerhoff program has a Graduate Fellows wing to support and encourage graduate students. This would be the next step in the pipeline, of course, but hey why not? We’ve just reviewed a SfN program which was only able to be extended to 20% of URM applicants. I would imagine the total amount of URM graduate student support is also less than that needed for most applicants and therefore more graduate fellowships would be welcome. But what about REALLY moving the needle? What could CZI do?

The Science wing of CZI, headed by Cori Bargmann, is setting out to “cure, prevent, or manage all diseases”. Right up at the top of the splash page it talks about the People who “move the field forward”. They go on to say under their approach to supporting projects that they “believe that collaboration, risk taking, and staying close to the scientific community are our best opportunities to accelerate progress in science”. Risk taking. Risk taking. There is one thing that supports risk taking and that is significant and stable research funding. This is something that the Ginther report identified as a particular problem for PIs from some underrepresented groups. It is for certain sure a player in many people’s research program trajectories.

So I’m going to propose that CZI should set their sights on creating a version of what HHMI is doing with membership limited to PIs from underrepresented groups, broadly writ. It is up to them how they want to box this in, of course, but the basic principle would be to give stable research support to those who are less readily able to achieve that because of various biases in, e.g., NIH grant review and selection as well as in the good old boys club that is the HHMI.

The Society for Neuroscience Shouldn’t be Bragging About the Neuroscience Scholars Program

April 5, 2019

The Society for Neuroscience recently twittered a brag about its Neuroscience Scholars program.

It was the “38 years” part that got me. That is a long time. And we still do not have anything like robust participation of underrepresented* individuals in neuroscience. This suggests that, particularly when it comes to the “career growth” goals of this program, it is failing. I stepped over to the SfN page on the Program and was keen to see outcomes, aka, faculty. Nothing. Okay, let’s take a peek at the PDF brochure reviewing the 30 year history of the program. I started tweeting bits in outrage and then….well, time to blog.

First off, the brochure says the program is funded by the NIH and has been from the outset “.. SfN has received strong support and funding from the NIH, starting in 1982 with funding from what was then the National Institute for Neurological and Communicative Disorders and Stroke (NINCDS). … with strong and enduring support from NIH, in particular NINDS, the NSP is recognized as one of the most successful diversity programs“. Oh? Has it accomplished much? Let’s peer into the report. “Since the first 8 participants who attended the 1981 and 1982 SfN annual meetings, the program has grown to support a total of 579 Scholars to date. During that time, the NSP has helped foster the careers of many successful researchers in neuroscience.” Well we all know foster the careers is nice pablum but it doesn’t say anything about actual accomplishment. And just so we are all nice and clear, SfN itself says this about the goal “The NSP’s current overall goal is to increase the likelihood that diverse trainees who enter the neuroscience field continue to advance in their careers — that is, fixing the “leaky pipeline.”” So yes. FACULTY.

[Sidebar: And I also think the funding by the NIH is plenty of justification for asking about grant success of any Scholars who became faculty, but I don’t see how to get at that. Related to this, I will just note that the Ginther report came out in 2011, 30 years after those “first 8 participants” attended the 1981 SfN meeting.]

Here’s what they have to offer on their survey to determine the outcomes from the first 30 years of the program. “The survey successfully reached 220 past Scholars (approximately 40 percent) and had a strong overall response rate (38 percent, n=84).” As I said on Twitter: ruh roh. Survivor bias bullshit warning alert…….. 84 out of 579 Scholars to date means they only reached 14.5% of their Scholars to determine their outcome. And they are pretty impressed with themselves. “Former Scholars have largely stayed within academia and achieved high standing, including full professorships and other faculty positions.” “Largely”. Nice weasel wording.

And more importantly, do you just maaaaaybe think this sample of respondents is highly frigging enriched in people who made it to professorial appointments and remain active neuroscientists? Again, this is out of the 38% responding of the 40% “reached”, aka 84/579 or 14.5% of all Scholars. And let’s just sum up the pie chart to assess their “largely” claim. I make it out to be that 50 of these scholars are in professorial appointments; this is only 8.6% of the total number of Scholars assisted over 30 years. Another 4 (0.7%) are listed separately as department heads. This does not seem to me to be a strong fix of the supposed leaky pipeline.

Now, as a reminder this is 8.6% out of an already highly selected subset of the most promising underrepresented burgeoning neuroscientists. The SfN brags about how highly competitive the program is: “A record 102 applicants applied in 2010 for 20 coveted slots.” RIGHT? So the hugely leaky pipeline of Scholars reporting back for their program review purposes (38% responding of 40% “reached”) is only reflecting the leaking AFTER they’ve already had this 1/5 selection. What about the other 80%? Okay so let’s take their faculty plus department head numbers (8.6% + 0.7%), multiply by the 0.2 (i.e., 20%) selection factor from their applicant pool (don’t even get me started about those URM trainees who never even apply)…1.86%.
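For anyone who wants to check my arithmetic, here is a minimal sketch of the calculation. The counts are the ones quoted above from the brochure; the variable names are mine, and the 0.2 selection factor is the rounded one-in-five figure (20 slots for 102 applicants in 2010), which I am assuming holds for other years as well.

```python
# Figures as quoted in the post from the SfN brochure.
TOTAL_SCHOLARS = 579     # Scholars supported to date
RESPONDENTS = 84         # survey respondents
PROFESSORS = 50          # the post's count from the pie chart
DEPARTMENT_HEADS = 4     # listed separately in the pie chart
SELECTION_FACTOR = 0.2   # assumption: ~20 slots per 102 applicants, rounded


def percent(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


respondent_pct = percent(RESPONDENTS, TOTAL_SCHOLARS)
faculty_pct = percent(PROFESSORS + DEPARTMENT_HEADS, TOTAL_SCHOLARS)
# Scale the known-faculty share by the odds of getting into the program at all.
faculty_pct_of_applicants = faculty_pct * SELECTION_FACTOR

print(f"Respondents as share of all Scholars: {respondent_pct:.1f}%")
print(f"Known faculty or department heads:    {faculty_pct:.1f}%")
print(f"Scaled to the applicant pool:         {faculty_pct_of_applicants:.1f}%")
```

The last figure differs from my hand calculation only in the second decimal place, because I multiplied the already rounded percentages. Either way it lands under 2%.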

Less than 2%. That’s it?????? That sounds exactly like the terrible numbers of African American faculty in science departments to me. And note, the SfN says its program has since 1997 enrolled 48% Hispanic/Latinx and 35% Black/African-American Scholars. So we should be focusing on the total URM faculty numbers. I found another SfN report (pdf) showing there were 1% African-American and 5% Hispanic/Latinx faculty in US neuroscience PhD programs (2016).

This SfN program is doing NOTHING to fix the leaky pipeline problem from what their numbers are telling us.

___

*I shouldn’t have to point this out but African-Americans constitute about 12.7% of the US population, and Hispanic/Latinx about 17.8%.

A comment from pielcanelaphd on a prior post tips us off to a new report (PDF) from the Government Accountability Office, described as a report to Congressional Committees.

The part of the report that deals with racial and ethnic disparities is mostly recitation of the supposed steps NIH has been taking in the wake of the Ginther report in 2011. But what is most important is the inclusion of Figure 2, an updated depiction of the funding rate disparity.

GAO-18-545: NIH RESEARCH: Action Needed to Ensure Workforce Diversity Strategic Goals Are Achieved

These data are described mostly as the applicant funding rate or similar. The Ginther data focused on the success rate of applications from PIs of various groups. So if these data are by applicant PI and not by applications, there will be some small differences. Nevertheless, the point remains that things have not improved and PIs from underrepresented ethnic and racial groups experience a disparity relative to white PIs.

I’ve been seeing a few Twitter discussions that deal with a person wondering if their struggles in the academy are because of themselves (i.e., their personal merit/demerit axis) or because of their category (read: discrimination). This touches on the areas of established discrimination that we talk about around these parts, including recently the NIH grant fate of ESI applicants, women applicants and POC applicants.

In any of these cases, or the less grant-specific situations of adverse outcome in academia, it is impossible to determine on a case by case basis if the person is suffering from discrimination related to their category. I mean sure, if someone makes a very direct comment that they are marking down a specific manuscript, grant or recommendation only because the person is a woman, or of color or young then we can draw some conclusions. This never* happens. And we do all vary in our treatments/outcomes and in our merits that are intrinsic to ourselves. Sometimes outcomes are deserved, sometimes they vary by simple statistical chance and sometimes they are even better than deserved. So it is an unanswerable question, even if the chances are high that sometimes one is going to be treated poorly due to one’s membership in one of the categories against which discrimination has been proven.

These questions become something other than unanswerable when the person pondering them is doing “fine”.

“You are doing fine! Why would you complain about mistreatment, never mind wonder if it is some sort of discrimination you are suffering?”

I was also recently struck by a Tweeter comment about suffering a very current discrimination of some sort that came from a scientist who is by many measures “doing fine”.

Once, quite some time ago, I was on a seminar committee charged with selecting a year’s worth of speakers. We operated under a number of constraints, financial and topic-wise; I’m sure many of you have been on similar committees. I immediately noticed we weren’t selecting a gender balanced slate and started pushing explicitly for us to include more women. Everyone sort of ruefully agreed with me and admitted we need to do better. Including a nonzero number of female faculty on this panel, btw. We did try to do better. One of the people we invited one year was a not-super-senior person (one of our supposed constraints was seniority) at the time with a less than huge reputation. We had her visit for seminar and it was good if perhaps not as broad as some of the ones from more-senior people. But it all seemed appropriate and fine. The post-seminar kvetching was instructive to me. Many folks liked it just fine but a few people complained about how it wasn’t up to snuff and we shouldn’t have invited her. I chalked it up to the lack of seniority, maybe a touch of sexism and let it go. I really didn’t even think twice about the fact that she’s also a person of color.

Many years later this woman is doing fine. Very well respected member of the field, with a strong history of contributions. Sustained funding track record. Trainee successes. A couple of job changes, society memberships, awards and whatnot that one might view as testimony to an establishment type of career. A person of substance.

This person went on to have the type of career and record of accomplishment that would have any casual outsider wondering how she could possibly complain about anything given that she’s done just fine and is doing just fine. Maybe even a little too fine, assuming she has critics of her science (which everyone does).

Well, clearly this person does complain, given the recent Twitt from her about some recent type of discrimination. She feels this discrimination. Should she? Is it really discrimination? After all, she’s doing fine.

Looping back up to the other conversations mentioned at the top, I’ll note that people bring this analysis into their self-doubt musings as well. A person who suffers some sort of adverse outcome might ask themselves why they are getting so angry. “Isn’t it me?”, they think, “Maybe I merited this outcome”. Why are they so angered about statistics or other established cases of discrimination against other women or POC? After all, they are doing fine.

And of course even more reliably than in their internal dialog we hear the question from white men. Or whoever doesn’t happen to share the characteristics under discussion at the moment. There are going to be a lot of these folks that are of lesser status. Maybe they didn’t get that plum job at that plum university. Or had a more checkered funding history. Fewer highly productive collaborations, etc. They aren’t doing as “fine”. And so anyone who is doing better, and accomplishing more, clearly could not have ever suffered any discrimination personally. Even those people who admit that there is a bias against the class will look at this person who is doing fine and say “well, surely not you. You had a cushy ride and have nothing to complain about”.

I mused about the seminar anecdote because it is a fairly specific reminder to me that this person probably faced a lot of implicit discrimination through her career. Bias. Opposition. Neglect.

And this subtle antagonism surely did make it harder for her.

It surely did limit her accomplishments.

And now we have arrived. This is what is so hard to understand in these cases. Both in the self-reflection of self-doubt (imposter syndrome is a bear) and in the assessment of another person who is apparently doing fine.

They should be doing even better. Doing more, or doing what they have done more easily.

It took me a long while to really appreciate this**.

No matter how accomplished the woman or person of color might be at a given point of their career, they would have accomplished more if it were not for the headwind against which they always had to contend.

So no, they are not “doing fine”. And they do have a right to complain about discrimination.

__

*it does. but it is vanishingly rare in the context of all cases where someone might wonder if they were victim of some sort of discrimination.

**I think it is probably my thinking about how Generation X has been stifled in their careers relative to the generations above us that made this clearest to me. It’s not quite the same but it is related.

Racial Disparity in K99 Awards and R00 Transitions

July 19, 2018

Oh, what a shocker.

In the wake of the 2011 Ginther finding [see archives on Ginther if you have been living under a rock] that there was a significant racial bias in NIH grant review, the concrete response of the NIH was to blame the pipeline. Their only real, dollar-funded initiatives were to attempt to get more African-American trainees into the science pipeline. The obvious subtext here was that the current PIs, against whom the grant review bias was defined, must be the problem, not the victim. Right? If you spend all your time insisting that, since there were no red-fanged, white-hooded peer reviewers overtly proclaiming their hate for black people, peer review can’t be the problem, and you put your tepid money initiatives into scraping up more trainees of color, you are saying the current black PIs deserve their fate. Current example: NIGMS trying to transition more underrepresented individuals into faculty ranks, rather than funding the ones that already exist.

Well, we have some news. The Rescuing Biomedical Research blog has a new post up on Examining the distribution of K99/R00 awards by race authored by Chris Pickett.

It reviews success rates of K99 applicants from 2007-2017. Application PI demographics broke down to nearly 2/3 White, ~1/3 Asian, 2% multiracial and 2% black. Success rates: White, 31%, Multiracial, 30.7%, Asian, 26.7%, Black, 16.2%. Conversion to R00 phase rates: White, 80%, Multiracial, 77%, Asian, 76%, Black, 60%.

In terms of Hispanic ethnicity, 26.9% success for K99 and 77% conversion rate, neither significantly different from the nonHispanic rates.

Of course, seeing as how the RBR people are the VerySeriousPeople considering the future of biomedical careers (sorry Jeremy Berg but you hang with these people), the Discussion is the usual throwing up of hands and excuse making.

“The source of this bias is not clear…”. “an analysis …could address”. “There are several potential explanations for these data”.

and of course

“put the onus on universities”

No. Heeeeeeyyyyyuuullll no. The onus is on the NIH. They are the ones with the problem.

And, as per usual, the fix is extraordinarily simple. As I repeatedly observe in the context of the Ginther finding, the NIH responded to a perception of a disparity in the funding of new investigators with immediate heavy handed top-down quota based affirmative action for many applications from ESI investigators. And now we have Round2 where they are inventing new quota based affirmative action policies for the second round of funding for these self-same applicants. Note well: the statistical beneficiaries of ESI affirmative action policies are white investigators.

The number of K99 applications from black candidates was 154 over 10 years. 25 of these were funded. To bring this up to the success rate enjoyed by white applicants, the NIH need only have funded 23 more K99s. Across 28 Institutes and Centers. Across 10 years, aka 30 funding cycles. One more per IC per decade to fix the disparity. Fixing the Asian bias would be a little steeper, they’d need to fund another 97, let’s round that to 10 per year. Across all 28 ICs.
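Here is a minimal sketch of that back-of-the-envelope calculation, using only the figures quoted above. The variable names are mine. I take the Asian shortfall of 97 as given rather than re-deriving it, since the post does not list the exact number of applications from Asian candidates.

```python
BLACK_APPLICATIONS = 154   # K99 applications from black candidates, per the post
BLACK_FUNDED = 25          # of those, funded
WHITE_SUCCESS_RATE = 0.31  # K99 success rate for white applicants
ASIAN_EXTRA_AWARDS = 97    # the post's figure, taken as given (not re-derived)
INSTITUTES = 28            # NIH Institutes and Centers
YEARS = 10

black_success_rate = BLACK_FUNDED / BLACK_APPLICATIONS
# Awards black applicants would have received at the white success rate.
awards_at_parity = WHITE_SUCCESS_RATE * BLACK_APPLICATIONS
extra_black_awards = round(awards_at_parity - BLACK_FUNDED)

print(f"Black K99 success rate: {100 * black_success_rate:.1f}%")
print(f"Extra awards needed for parity: {extra_black_awards}")
print(f"Per IC per decade: {extra_black_awards / INSTITUTES:.2f}")
print(f"Extra Asian awards per year, all ICs: {ASIAN_EXTRA_AWARDS / YEARS:.1f}")
```

The per-IC figure comes out below one, which is where "one more per IC per decade" comes from.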

Now that they know about this, just as with Ginther, the fix is duck soup. The Director pulls each IC Director aside in a quiet moment and says ‘fix this’. That’s it. That’s all that would be required. And the Directors just commit to pick up one more Asian application every year or so and one more black application every, checks notes, decade and this is fixed.

This is what makes the NIH response to all of this so damn disturbing. It’s rounding error. They pick up grants all the time for reasons way more biased and disturbing than this. Saving a BSD lab that allegedly ran out of funding. Handing out under the table Administrative Supplements for gawd knows what random purpose. Prioritizing the F32 applications from some labs over others. Ditto the K99 apps.

They just need to apply their usual set of glad handing biases to redress this systematic problem with the review and funding of people of color.

And they steadfastly refuse to do so.

For this one specific area of declared Programmatic interest.

When they pick up many, many more grants out of order of review for all their other varied Programmatic interests.

You* have to wonder why.

__

h/t @biochembelle

*and those people you are trying to lure into the pipeline, NIH? They are also wondering why they should join a rigged game like this one.

A bit in Science authored by Jocelyn Kaiser recently covered the preprint posted by Forscher and colleagues which describes a study of bias in NIH grant review. I was struck by a response Kaiser obtained from one of the authors on the question of range restriction.

Some have also questioned Devine’s decision to use only funded proposals, saying it fails to explore whether reviewers might show bias when judging lower quality proposals. But she and Forscher point out that half of the 48 proposals were initial submissions that were relatively weak in quality and only received funding after revisions, including four that were of too low quality to be scored.

They really don’t seem to understand NIH grant review where about half of all proposals are “too low quality to be scored”. Their inclusion of only 8% ND applications simply doesn’t cut it. Thinking about this, however, motivated me to go back to the preprint, follow some links to associated data and download the excel file with the original grant scores listed.

I do still think they are missing a key point about restriction of range. It isn’t, much as they would like to think, only about the score. The score on a given round is a value with considerable error, as the group itself described in a prior publication in which the same grant reviewed in different ersatz study sections ended up with a different score. If there is a central tendency for true grant score, which we might approach with dozens of reviews of the same application, then sometimes any given score is going to be too good, and sometimes too bad, as an estimate of the central tendency. Which means that on a second review, the scores for the former are going to tend to get worse and the scores for the latter are going to tend to get better. The authors only selected the ones that tended to get better for inclusion (i.e., the ones that reached funding on revision).

Another way of getting at this is to imagine two grants which get the same score in a given review round. One is kinda meh, with mostly reasonable approaches and methods from a pretty good PI with a decent reputation. The other grant is really exciting, but with some ill considered methodological flaws and a missing bit of preliminary data. Each one comes back in revision with the former merely shined up a bit and the latter with awesome new preliminary data and the methods fixed. The meh one goes backward (enraging the PI who “did everything the panel requested”) and the exciting one is now in the fundable range.

The authors have made the mistake of thinking that grants that are discussed, but get the same score well outside the range of funding, are the same in terms of true quality. I would argue that the fact that the “low quality” ones they used were revisable into the fundable range makes them different from the similar scoring applications that did not eventually win funding.
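To make the selection argument concrete, here is a toy simulation. It is a sketch under assumptions that are entirely mine, not a model of the Forscher data: each application has a hypothetical "true quality", each review adds independent noise, higher numbers are better (the reverse of the NIH 1-9 scale), and the band and payline values are arbitrary. It also does not model applicants actually improving the proposal; even with pure rescoring noise, applications that start with the same mediocre score and later cross the payline differ in true quality from those that do not.

```python
import random
from statistics import mean

random.seed(0)  # fixed seed so the sketch is repeatable

N_APPLICATIONS = 20000
NOISE_SD = 1.0       # assumed review-to-review error
BAND = (-0.5, 0.0)   # assumed band of "same mediocre first score"
FUND_LINE = 1.0      # assumed payline on the revised score

funded_quality = []
unfunded_quality = []
for _ in range(N_APPLICATIONS):
    quality = random.gauss(0.0, 1.0)                 # hypothetical true merit
    first = quality + random.gauss(0.0, NOISE_SD)    # score on first submission
    revised = quality + random.gauss(0.0, NOISE_SD)  # score on revision
    # Keep only applications whose first score fell in the same mediocre band.
    if BAND[0] <= first <= BAND[1]:
        if revised > FUND_LINE:
            funded_quality.append(quality)
        else:
            unfunded_quality.append(quality)

mean_funded = mean(funded_quality)
mean_unfunded = mean(unfunded_quality)
print(f"Mean true quality, funded on revision: {mean_funded:.2f}")
print(f"Mean true quality, never funded:       {mean_unfunded:.2f}")
```

In this toy setup the funded-on-revision group has the higher mean true quality despite identical first-round scores, which is the sense in which picking only eventually funded proposals restricts the range.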

In thinking about this, I came to realize another key bit of positive control data that the authors could provide to enhance our confidence in their study. I scanned through the preprint again and was unable to find any mention of them comparing the original scores of the proposals with the values that came out of their study. Was there a tight correlation? Was it equivalently tight across all of their PI name manipulations? To what extent did the new scores confirm the original funded, low quality and ND outcomes?

This would be key to at least partially counter my points about the range of applications that were included in this study. If the test reviewer subjects found the best original scored grants to be top quality, and the worst to be the worst, independent of PI name then this might help to reassure us that the true quality range within the discussed half was reasonably represented. If, however, the test subjects often reviewed the original top grants lower and the lower grants higher, this would reinforce my contention that the range of the central tendencies for the quality of the grant applications was narrow.

So how about it, Forscher et al? How about showing us the scores from your experiment for each application by PI designation along with the original scores?

__

Patrick Forscher, William Cox, Markus Brauer, and Patricia Devine. No race or gender bias in a randomized experiment of NIH R01 grant reviews. Created on: May 25, 2018 | Last edited: May 25, 2018; posted on PsyArXiv

I recently discussed some of the problems with a new pre-print by Forscher and colleagues describing a study which purports to evaluate bias in the peer review of NIH grants.

One thing that I figured out today is that the team that is funded under the grant which supported the Forscher et al study also produced a prior paper that I already discussed. That prior discussion focused on the use of only funded grants to evaluate peer review behavior, and the corresponding problems of a restricted range. The conclusion of this prior paper was that reviewers didn’t agree with each other in the evaluation of the same grant. This, in retrospect, also seems to be a design that was intended to fail. In that instance designed to fail to find correspondence between reviewers, just as the Forscher study seems constructed to fail to find evidence of bias.

I am working up a real distaste for the “Transformative” research project (R01 GM111002; 9/2013-6/2018) funded to PIs M. Carnes and P. Devine that is titled EXPLORING THE SCIENCE OF SCIENTIFIC REVIEW. This project is funded to the tune of $465,804 in direct costs in the final year and reached as high as $614,398 direct in year 3. We can, I think, fairly demand a high standard for the resulting science. I do not think this team is meeting a high standard.

One of the papers (Pier et al 2017) produced by this project discusses the role of study section discussion in revising/calibrating initial scoring.

Results suggest that although reviewers within a single panel agree more following collaborative discussion, different panels agree less after discussion, and Score Calibration Talk plays a pivotal role in scoring variability during peer review.

So they know. They know that scores change through discussion and they know that a given set of applications can go in somewhat different directions based on who is reviewing. They know that scores can change depending on what other ersatz panel members are included and perhaps depending on how the total number of grants are distributed to reviewers in those panels. The study described in the Forscher pre-print did not convene panels:

Reviewers were told we would schedule a conference call to discuss the proposals with other reviewers. No conference call would actually occur; we informed the prospective reviewers of this call to better match the actual NIH review process.

Brauer is an overlapping co-author. The senior author on the Forscher study is Co-PI, along with the senior author of the Pier et al. papers, on the grant that funds this work. The Pier et al 2017 Res Eval paper shows that they know full well that study section discussion is necessary to “better match the actual NIH review process”. Their paper shows that study section discussion does so in part by getting better agreement on the merits of a particular proposal across the individuals doing the reviewing (within a given panel). By extension, not including any study section type discussion is guaranteed to result in a more variable assessment. To throw noise into the data. Which has a tendency to make it more likely that a study will arrive at a null result, as the Forscher et al study did.
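The link between noisier scores and null results can be sketched with a toy power simulation. Everything here is an assumption of mine for illustration: the effect size, the two noise levels, the group size and the crude |t| > 2 cutoff are arbitrary and are not taken from either the Pier or Forscher papers. The only point is that the same real difference is detected less often when individual scores are more variable.

```python
import random
from statistics import mean, variance


def detects_effect(effect, noise_sd, n_per_group, rng):
    """Simulate one two-group experiment; return True if it flags a difference."""
    control = [rng.gauss(0.0, noise_sd) for _ in range(n_per_group)]
    treated = [rng.gauss(effect, noise_sd) for _ in range(n_per_group)]
    standard_error = (variance(control) / n_per_group
                      + variance(treated) / n_per_group) ** 0.5
    t_stat = (mean(treated) - mean(control)) / standard_error
    return abs(t_stat) > 2.0  # rough cutoff, near p < 0.05 at this group size


def detection_rate(effect, noise_sd, n_per_group=50, n_experiments=500, seed=1):
    """Fraction of simulated experiments that detect a true effect."""
    rng = random.Random(seed)
    hits = sum(detects_effect(effect, noise_sd, n_per_group, rng)
               for _ in range(n_experiments))
    return hits / n_experiments


# Same hypothetical bias effect, two assumed levels of scoring noise.
tighter_scores = detection_rate(effect=0.5, noise_sd=1.0)
noisier_scores = detection_rate(effect=0.5, noise_sd=2.0)
print(f"Detection rate with tighter scores: {tighter_scores:.2f}")
print(f"Detection rate with noisier scores: {noisier_scores:.2f}")
```

Doubling the noise in this sketch sharply cuts how often a real effect shows up, so a design that leaves out the step known to tighten scores is stacking the deck toward a null.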

These investigators also know that the grant load for NIH reviewers is not typically three applications, as was used in the study described in the Forscher pre-print. From Pier et al 2017 again:

We further learned that although a reviewer may be assigned 9–10 applications for a standing study section, ad hoc panels or SEPs can receive assignments as low as 5–6 applications; thus, the SRO assigned each reviewer to evaluate six applications based on their scientific expertise, as we believed a reviewer load on the low end of what is typical would increase the likelihood of study participation.

I believe that the reviewer load is critically important if you are trying to mimic the way scores are decided by the NIH review process. The reason is that while several NIH documents and reviewer guides pay lipservice to the idea that the review of each grant proposal is objective, the simple truth is that review is comparative.

Grant applications are scored on a 1-9 scale with descriptors ranging from Exceptional (1) to Very Good (4) to Poor (9). On an objective basis, I and many other experienced NIH grant reviewers argue, the distribution of NIH grant applications (all of them) is not flat. There is a very large peak around the Excellent to Very Good (i.e., 3-4) range, in my humble estimation. And if you are familiar with review you will know that there is a pronounced tendency of reviewers, unchecked, to stack their reviews around this range. They do it within reviewer and they do it as a panel. This is why the SRO (and Chair, occasionally) spends so much time before the meeting exhorting the panel members to spread their scores. To flatten the objective distribution of merit into a more linear set of scores. To, in essence, let a competitive ranking procedure sneak into this supposedly objective and non-comparative process.

Many experienced reviewers understand why this is being asked of them, endorse it as necessary (at the least) and can do a fair job of score spreading*.

The fewer grants a reviewer has on the immediate assignment pile, the less distance there need be across this pile. If you have only three grants and score them 2, 3 and 4, well hey, scores spread. If, however, you have a pile of 6 grants and score them 2, 3, 3, 3, 4, 4 (which is very likely the objective distribution) then you are quite obviously not spreading your scores enough. So what to do? Well, for some reason actual NIH grant reviewers are really loath to throw down a 1. So 2 is the top mark. Gotta spread the rest. Ok, how about 2, 3, 3…er 4 I mean. Then 4, 4…shit. 4, 5 and oh 6 seems really mean so another 5. Ok. 2, 3, 4, 4, 5, 5. phew. Scores spread, particularly around the key window that is going to make the SRO go ballistic.

Wait, what’s that? Why are reviewers working so hard around the 2-4 zone and care less about 5+? Well, surprise surprise that is the place** where it gets serious between probably fund, maybe fund and no way, no how fund. And reviewers are pretty sensitive to that**, even if they do not know precisely what score will mean funded / not funded for any specific application.

That little spreading exercise was for a six grant load. Now imagine throwing three more applications into this mix for the more typical reviewer load.

For today, it is not important to discuss how a reviewer decides one grant comes before the other or that perhaps two grants really do deserve the same score. The point is that grants are assessed against each other. In the individual reviewer’s stack and to some extent across the entire study section. And it matters how many applications the reviewer has to review. This affects that reviewer’s pre-discussion calibration of scores.

Read phase, after the initial scores are nominated and before the study section meets, is another place where re-calibration of scores happens. (I’m not sure if they included that part in the Pier et al studies, it isn’t explicitly mentioned so presumably not?)

If the Forscher study only gave reviewers three grants to review, and did not do the usual exhortation to spread scores, this is a serious flaw. Another serious and I would say fatal flaw in the design. The tendency of real reviewers is to score more compactly. This is, presumably, enhanced by the selection of grants that were funded (either on the version that was used or in revision) which we might think would at least cut off the tail of really bad proposals. The ranges will be from 2-4*** instead of 2-5 or 6. Of course this will obscure differences between grants, making it much much more likely that no effect of sex or ethnicity (the subject of the Forscher et al study) of the PI would emerge.

__

Elizabeth L. Pier, Markus Brauer, Amarette Filut, Anna Kaatz, Joshua Raclaw, Mitchell J. Nathan, Cecilia E. Ford and Molly Carnes, Low agreement among reviewers evaluating the same NIH grant applications. 2018, PNAS: published ahead of print March 5, 2018, https://doi.org/10.1073/pnas.1714379115

Elizabeth L. Pier, Joshua Raclaw, Anna Kaatz, Markus Brauer, Molly Carnes, Mitchell J. Nathan and Cecilia E. Ford. ‘Your comments are meaner than your score’: score calibration talk influences intra- and inter-panel variability during scientific grant peer review, Res Eval. 2017 Jan; 26(1): 1–14. Published online 2017 Feb 14. doi: 10.1093/reseval/rvw025

Patrick Forscher, William Cox, Markus Brauer, and Patricia Devine. No race or gender bias in a randomized experiment of NIH R01 grant reviews. Created on: May 25, 2018 | Last edited: May 25, 2018 https://psyarxiv.com/r2xvb/

*I have related before that when YHN was empaneled on a study section he practiced a radical version of score spreading. Initial scores for his pile were tagged to the extreme ends of the permissible scores (this was under the old system) and even intervals within that were used to place the grants in his pile.

**as are SROs. I cannot imagine an SRO ever getting on your case to spread scores for a pile that comes in at 2, 3, 4, 5, 7, 7, 7, 7, 7.

***Study sections vary a lot in their precise calibration of where the hot zone is and how far apart scores are spread. This is why the more important funding criterion is the percentile, which attempts to adjust for such study section differences. This is the long way of saying I’m not encouraging comments naggling over these specific examples. The point should stand regardless of your pet study sections’ calibration points.

NIH Ginther Fail: A transformative research project

May 29, 2018

In August of 2011 the Ginther et al. paper published in Science let us know that African-American PIs were disadvantaged in the competition for NIH awards. There was an overall success rate disparity identified as well as a related necessity of funded PIs to revise their proposals more frequently to become funded.

Both of these have significant consequences for what science gets done and how careers unfold.

I have been very unhappy with the NIH response to this finding.

I have recently become aware of a “Transformative” research project (R01 GM111002; 9/2013-6/2018) funded to PIs M. Carnes and P. Devine that is titled EXPLORING THE SCIENCE OF SCIENTIFIC REVIEW. From the description/abstract:

Unexplained disparities in R01 funding outcomes by race and gender have raised concern about bias in NIH peer review. This Transformative R01 will examine if and how implicit (i.e., unintentional) bias might occur in R01 peer review… Specific Aim #2. Determine whether investigator race, gender, or institution causally influences the review of identical proposals. We will conduct a randomized, controlled study in which we manipulate characteristics of a grant principal investigator (PI) to assess their influence on grant review outcomes…The potential impact is threefold; this research will 1) discover whether certain forms of cognitive bias are or are not consequential in R01 peer review… the results of our research could set the stage for transformation in peer review throughout NIH.

It could not be any clearer that this project is a direct NIH response to the Ginther result. So it is fully and completely appropriate to view any resulting studies in this context. (Just to get this out of the way.)

I became aware of this study through a Twitter mention of a pre-print that has been posted on PsyArXiv. The version I have read is:

No race or gender bias in a randomized experiment of NIH R01 grant reviews. Patrick Forscher, William Cox, Markus Brauer, Patricia Devine. Created on: May 25, 2018 | Last edited: May 25, 2018

The senior author is one of the Multi-PIs on the aforementioned funded research project and the pre-print makes this even clearer with a statement.

Funding: This research was supported by 5R01GM111002-02, awarded to the last author.

So while yes, the NIH does not dictate the conduct of research under awards that it makes, this effort can be fairly considered part of the NIH response to Ginther. As you can see from comparing the abstract of the funded grant to the pre-print study there is every reason to assume the nature of the study as conducted was actually spelled out in some detail in the grant proposal. Which the NIH selected for funding, apparently with some extra consideration*.

There are many, many, many things wrong with the study as depicted in the pre-print. It is going to take me more than one blog post to get through them all. So consider none of these to be complete. I may also repeat myself on certain aspects.

First up today is the part of the experimental design that was intended to create the impression in the minds of the reviewers that a given application had a PI of certain key characteristics, namely on the spectra of sex (male versus female) and ethnicity (African-American versus Irish-American). This, I will note, is a tried and true design feature for some very useful prior findings. Change the author names to initials and you can reduce apparent sex-based bias in the review of papers. Change the author names to African-American sounding ones and you can change the opinion of the quality of legal briefs. Change sex, apparent ethnicity of the name on job resumes and you can change the proportion called for further interviewing. Etc. You know the literature. I am not objecting to the approach, it is a good one, but I am objecting to its application to NIH grant review and the way they applied it.

The problem with application of this to NIH Grant review is that the Investigator(s) is such a key component of review. It is one of five allegedly co-equal review criteria and the grant proposals include a specific document (Biosketch) which is very detailed about a specific individual and their contributions to science. This differs tremendously from the job of evaluating a legal brief. It varies tremendously from reviewing a large stack of resumes submitted in response to a fairly generic job. It even differs from the job of reviewing a manuscript submitted for potential publication. NIH grant review specifically demands an assessment of the PI in question.

What this means is that it is really difficult to fake the PI and have success in your design. Success absolutely requires that the reviewers who are the subjects in the study both fail to detect the deception and genuinely develop a belief that the PI has the characteristics intended by the manipulation (i.e., man versus woman and black versus white). The authors recognized this, as we see from page 4 of the pre-print:

To avoid arousing suspicion as to the purpose of the study, no reviewer was asked to evaluate more than one proposal written by a non-White-male PI.

They understand that suspicion as to the purpose of the study is deadly to the outcome.

So how did they attempt to manipulate the reviewer’s percept of the PI?

Selecting names that connote identities. We manipulated PI identity by assigning proposals names from which race and sex can be inferred 11,12. We chose the names by consulting tables compiled by Bertrand and Mullainathan 11. Bertrand and Mullainathan compiled the male and female first names that were most commonly associated with Black and White babies born in Massachusetts between 1974 and 1979. A person born in the 1970s would now be in their 40s, which we reasoned was a plausible age for a current Principal Investigator. Bertrand and Mullainathan also asked 30 people to categorize the names as “White”, “African American”, “Other”, or “Cannot tell”. We selected first names from their project that were both associated with and perceived as the race in question (i.e., >60 odds of being associated with the race in question; categorized as the race in question more than 90% of the time). We selected six White male first names (Matthew, Greg, Jay, Brett, Todd, Brad) and three first names for each of the White female (Anne, Laurie, Kristin), Black male (Darnell, Jamal, Tyrone), and Black female (Latoya, Tanisha, Latonya) categories. We also chose nine White last names (Walsh, Baker, Murray, Murphy, O’Brian, McCarthy, Kelly, Ryan, Sullivan) and three Black last names (Jackson, Robinson, Washington) from Bertrand and Mullainathan’s lists. Our grant proposals spanned 12 specific areas of science; each of the 12 scientific topic areas shared a common set of White male, White female, Black male, and Black female names. First names and last names were paired together pseudo-randomly, with the constraints that (1) any given combination of first and last names never occurred more than twice across the 12 scientific topic areas used for the study, and (2) the combination did not duplicate the name of a famous person (i.e., “Latoya Jackson” never appeared as a PI name).
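For concreteness, the pairing procedure described in that passage can be sketched roughly as below. This is my own guess at one way to satisfy the two stated constraints, not the authors' actual code; the function name `pair_names`, the seeded random draw and the `excluded` set are assumptions made purely for illustration.

```python
import random
from collections import Counter


def pair_names(first_names, last_names, n_topics=12, max_repeats=2,
               excluded=frozenset(), seed=0):
    """Pseudo-randomly pair first and last names across topic areas.

    Hypothetical sketch of the two constraints quoted above:
    (1) no first/last combination is used more than max_repeats times,
    (2) combinations listed in `excluded` (famous names) never appear.
    """
    rng = random.Random(seed)  # seeded so the draw is repeatable

    # Every allowed first/last combination.
    candidates = [(f, l) for f in first_names for l in last_names
                  if (f, l) not in excluded]
    if len(candidates) * max_repeats < n_topics:
        raise ValueError("not enough name combinations for the topic areas")

    counts = Counter()
    assigned = []
    for _ in range(n_topics):
        # Only combinations that have not yet hit the repeat cap.
        available = [c for c in candidates if counts[c] < max_repeats]
        choice = rng.choice(available)
        counts[choice] += 1
        assigned.append(choice)
    return assigned


# One name per topic area for a single category, using the names quoted above.
black_female = pair_names(
    ["Latoya", "Tanisha", "Latonya"],
    ["Jackson", "Robinson", "Washington"],
    excluded={("Latoya", "Jackson")},
)
```

Note how small the pool is: three first names and three surnames give only eight usable combinations to cover twelve topic areas.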

So basically the equivalent of blackface. They selected some highly stereotypical “black” first names and some “white” surnames which are almost all Irish (hence my comment above about Irish-American ethnicity instead of Caucasian-American. This also needs some exploring.).

Sorry, but for me this heightens concern that reviewers deduce what they are up to. Right? Each reviewer had only three grants (which is a problem for another post) and at least one of them practically screams in neon lights “THIS PI IS BLACK! DID WE MENTION BLACK? LIKE REALLY REALLY BLACK!”. As we all know, there are not 33% of applications to the NIH from African-American investigators. Any experienced reviewer would be at risk of noticing something is a bit off. The authors say nay.

A skeptic of our findings might put forward two criticisms: .. As for the second criticism, we put in place careful procedures to screen out reviewers who may have detected our manipulation, and our results were highly robust even to the most conservative of reviewer exclusion criteria.

As far as I can tell their “careful procedures” included only:

We eliminated from analysis 34 of these reviewers who either mentioned that they learned that one of the named personnel was fictitious or who mentioned that they looked up a paper from a PI biosketch, and who were therefore likely to learn that PI names were fictitious.

“who mentioned”.

There was some debriefing which included:

reviewers completed a short survey including a yes-or-no question about whether they had used outside resources. If they reported “yes”, they were prompted to elaborate about what resources they used in a free response box. Contrary to their instructions, 139 reviewers mentioned that they used PubMed or read articles relevant to their assigned proposals. We eliminated the 34 reviewers who either mentioned that they learned of our deception or looked up a paper in the PI’s biosketch and therefore were very likely to learn of our deception. It is ambiguous whether the remaining 105 reviewers also learned of our deception.

and

34 participants turned in reviews without contacting us to say that they noticed the deception, and yet indicated in review submissions that some of the grant personnel were fictitious.

So despite their instructions and discouraging participants from using outside materials, significant numbers of them did. And reviewers turned in reviews without saying they were on to the deception when they clearly were. And the authors did not, apparently, debrief in a way that could definitively say whether all, most or few reviewers were on to their true purpose. Nor does there appear to be any mention of asking reviewers afterwards whether they knew about Ginther, specifically, or disparate grant award outcomes in general terms. That would seem to be important.

Why? Because if you tell most normal decent people that they are to review applications to see if they are biased against black PIs they are going to fight as hard as they can to show that they are not a bigot. The Ginther finding was met with huge and consistent protestation on the part of experienced reviewers that it must be wrong because they themselves were not consciously biased against black PIs and they had never noticed any overt bias during their many rounds of study section. The authors clearly know this. And yet they did not show that the study participants were not on to them. While using those rather interesting names to generate the impression of ethnicity.

The authors make several comments throughout the pre-print about how this is a valid model of NIH grant review. They take a lot of pride in their design choices in many places. I was very struck by:

names that were most commonly associated with Black and White babies born in Massachusetts between 1974 and 1979. A person born in the 1970s would now be in their 40s, which we reasoned was a plausible age for a current Principal Investigator.

because my first thought when reading this design was “gee, most of the African-Americans that I know who have been NIH funded PIs are named things like Cynthia and Carl and Maury and Mike and Jean and…..dude something is wrong here.“. Buuuut, maybe this is just me and I do know of one “Yasmin” and one “Chanda” so maybe this is a perceptual bias on my part. Okay, over to RePORTER to search out the first names. I’ll check all time and for now ignore F- and K-mechs because Ginther focused on research awards, iirc. Darnell (4, none with the last names the authors used); LaTonya (1, ditto); LaToya (2, one with middle / maiden? name of Jones, we’ll allow that and oh, she’s non-contact MultiPI); Tyrone (6; man one of these had so many awards I just had to google and..well, not sure but….) and Tanisha (1, again, not a president surname).

This brings me to “Jamal”. I’m sorry but in science when you see a Jamal you do not think of a black man. And sure enough RePORTER finds a number of PIs named Jamal but their surnames are things like Baig, Farooqui, Ibdah and Islam. Not US Presidents. Some debriefing here to ensure that reviewers presumed “Jamal” was black would seem to be critical but, in any case, it furthers the suspicion that these first names do not map onto typical NIH funded African-Americans. This brings us to the further observation that first names may convey not merely ethnicity but something about subcategories within this subpopulation of the US. It could be that these names cause percepts bound up in geography, age cohort, socioeconomic status and a host of other things. How are they controlling for that? The authors make no mention that I saw.

The authors take pains to brag on their clever deep thinking on using an age range that would correspond to PIs in their 40s (wait, actually 35-40, if the funding of the project in -02 claim is accurate, when the average age of first major NIH award is 42?) to select the names and then they didn’t even bother to see if these names appeared on the NIH database of funded awards?

The takeaway for today is that the study validity rests on the reviewers not knowing the true purpose. And yet they showed that reviewers did not follow their instructions for avoiding outside research and that reviewers did not necessarily volunteer that they’d detected the name deception*** and yet some of them clearly had. Combine this with the nature of how the study created the impression of PI ethnicity via these particular first names and I think this can be considered a fatal flaw in the study.

__

Race, Ethnicity, and NIH Research Awards, Donna K. Ginther, Walter T. Schaffer, Joshua Schnell, Beth Masimore, Faye Liu, Laurel L. Haak, Raynard Kington. Science 19 Aug 2011: Vol. 333, Issue 6045, pp. 1015-1019. DOI: 10.1126/science.1196783

*Notice the late September original funding date combined with the June 30 end date for subsequent years? This almost certainly means it was an end of year pickup** of something that did not score well enough for regular funding. I would love to see the summary statement.

**Given that this is a “Transformative” award, it is not impossible that they save these up for the end of the year to decide. So I could be off base here.

*** As a bit of a sidebar there was a twitter person who claimed to have been a reviewer in this study and found a Biosketch from a supposedly female PI referring to a sick wife. Maybe the authors intended this but it sure smells like sloppy construction of their materials. What other tells were left? And if they *did* intend to bring in LGBTQ assumptions…well this just seems like throwing random variables into the mix to add noise.

DISCLAIMER: As per usual I encourage you to read my posts on NIH grant matters with the recognition that I am an interested party. The nature of NIH grant review is of specific professional interest to me and to people who are personally and professionally close to me.

Someone on the twitts posted an objection:

to UCSD’s policy of requiring applicants for faculty positions to supply a Statement of Contribution to Diversity with their application.

Mark J Perry linked to his own blog piece posted at the American Enterprise Institute* with the following observation:

All applicants for faculty positions at UCSD now required to submit a Contribution to Diversity Statement (aka Ideological Conformity Statements/Pledge of Allegiance to Left-Liberal Orthodoxy Statements)

Then some other twitter person chimed in with opinion on how this policy was unfair because it was so difficult for him to help his postdocs students with it.

Huh? A simple google search lands us on UCSD’s page on this topic.

The Contributions to Diversity Statement should describe your past efforts, as well as future plans to advance diversity, equity and inclusion. It should demonstrate an understanding of the barriers facing women and underrepresented minorities and of UC San Diego’s mission to meet the educational needs of our diverse student population.

The page has links to a full set of guidelines [PDF] as well as specific examples in Biology, Engineering and Physical Sciences (hmm, I wonder if these are the disciplines they find need the most help?). I took a look at the guidelines and examples. It’s pretty easy sailing. Sorry, but any PI who is complaining that they cannot help their postdocs figure out how to write the required statement is ~~lying~~ being disingenuous. What they really mean is that they disagree with having to prepare such a statement at all.

Like this guy Bieniasz, points for honesty:

I am particularly perplexed with this assertion that “The UCSD statement instructions (Part A) read like a test of opinions/ideology. Not appropriate for a faculty application“.

Ok, so is it a test of opinion/ideology? Let’s go to the guidelines provided by UCSD.

Describe your understanding of the barriers that exist for historically under-represented groups in higher education and/or your field. This may be evidenced by personal experience and educational background. For purposes of evaluating contributions to diversity, under-represented groups (URGs) includes under-represented ethnic or racial minorities (URM), women, LGBTQ, first-generation college, people with disabilities, and people from underprivileged backgrounds.

Pretty simple. Are you able to understand facts that have been well established in academia? This only asks you to describe your understanding. That’s it. If you are not aware of any of these barriers *cough*Ginther*cough*cough*, you are deficient as a candidate for a position as a University professor.

So the first part of this is merely asking if the candidate is aware of things about academia that are incredibly well documented. Facts. These are sort of important for Professors and any University is well within its rights to probe factual knowledge. This part does not ask anything about causes or solutions.

Now the other parts do ask you about your past activities and future plans to contribute to diversity and equity. Significantly, it starts with this friendly acceptance: “Some faculty candidates may not have substantial past activities. If such cases, we recommend focusing on future plans in your statement.“. See? It isn’t a rule-out type of thing, it allows for candidates to realize their deficits right now and to make a statement about what they might do in the future.

Let’s stop right there. This is not different in any way to the other major components of a professorial hire application package. For most of my audience, the “evidence of teaching experience and philosophy” is probably the more understandable example. Many postdocs with excellent science chops have pretty minimal teaching experience. Is it somehow unfair to ask them about their experience and philosophy? To give credit for those with experience and to ask those without to have at least thought about what they might do as a future professor?

Is it “liberal orthodoxy” if a person who insists that teaching is a waste of time and gets in the way of their real purpose (research) gets pushed downward on the priority list for the job?

What about service? Is it rude to ask a candidate for evidence of service to their Institutions and academic societies?

Is it unfair to prioritize candidates with a more complete record of accomplishment than those without? Of course it is fair.

What about scientific discipline, subfield, research orientations and theoretical underpinnings? Totally okay to ask candidates about these things.

Are those somehow “loyalty pledges”? or a requirement to “conform to orthodoxy”?

If they are, then we’ve been doing that in the academy a fair bit with nary a peep from these right wing think tank types.

__

*“Mark J. Perry is concurrently a scholar at AEI and a professor of economics and finance at the University of Michigan’s Flint campus.” This is a political opinion-making “think-tank” so take that into consideration.

Grant Supplements and Diversity Efforts

November 18, 2016

The NIH announced an “encouragement” for NIMH BRAINI PIs to apply for the availability of Research Supplements to Promote Diversity in Health-Related Research (Admin Supp).

Administrative supplements, for those who are unaware, are extra amounts of money awarded to an existing NIH grant. These are not reviewed by peer reviewers in a competitive manner. The decision lies entirely with Program Staff*. The Diversity supplement program in my experience and understanding amounts to a fellowship, i.e., mostly just salary support, for a qualifying trainee. (Blog note: Federal rules on underrepresentation apply….this thread will not be a place to argue about who is properly considered an underrepresented individual, btw.) The BRAINI-directed encouragement lays out the intent:

The NIH diversity supplement program offers an opportunity for existing BRAIN awardees to request additional funds to train and mentor the next generation of researchers from underrepresented groups who will contribute to advancing the goals of the BRAIN Initiative. Program Directors/Principal Investigators (PDs/PIs) of active BRAIN Initiative research program grants are thus encouraged to identify individuals from groups nationally underrepresented to support and mentor under the auspices of the administrative supplement program to promote diversity. Individuals from the identified groups are eligible throughout the continuum from high school to the faculty level. The activities proposed in the supplement application must fall within the scope of the parent grant, and both advance the objectives of the parent grant and support the research training and professional development of the supplement candidate. BRAIN Initiative PDs/PIs are strongly encouraged to incorporate research education activities that will help prepare the supplement candidate to conduct rigorous research relevant to the goals of the BRAIN Initiative

I’ll let you read PA-16-288 for the details but we’re going to talk generally about the Administrative Supplement process so it is worth reprinting this bit:

Administrative supplement, the funding mechanism being used to support this program, can be used to cover cost increases that are associated with achieving certain new research objectives, as long as the research objectives are within the original scope of the peer reviewed and approved project, or the cost increases are for unanticipated expenses within the original scope of the project. Any cost increases need to result from making modifications to the project that would increase or preserve the overall impact of the project consistent with its originally approved objectives and purposes.

Administrative supplements come in at least three varieties, in my limited experience. [N.b. You can troll RePORTER for supplements using “S1” or “S2” in the right hand field for the Project Number / Activity Code search limiter. Unfortunately I don’t think you get much info on what the supplement itself is for.] The support for underrepresented trainees is but one category. There are also topic-directed FOAs that are issued now and again because a given I or C wishes to quickly spin up research on some topic or other. Sex differences. Emerging health threats. Etc. Finally, there are those one might categorize within the “unanticipated expenses” and “increase or preserve the overall impact of the project” clauses in the block I’ve quoted above.

I first became aware of the Administrative Supplement in this last context. I was OUTRAGED, let me tell you. It seemed to be a way by which the well-connected and highly-established use their pet POs to enrich their programs beyond what they already had via competition. Some certain big labs seemed to be constantly supplemented on one award or other. Me, I sure had “unanticipated expenses” when I was just getting started. I had plenty of things that I could have used a few extra modules of cash to pay for to enhance the impact of my projects. I did not have any POs looking to hand me any supplements unasked and when I hinted very strongly** about my woes there was no help to be had***. I did not like administrative supplements as practiced one bit. Nevertheless, I was young and still believed in the process. I believed that I needn’t pursue the supplement avenue too hard because I was going to survive into the mid career stretch and just write competing apps for what I needed. God, I was naive.

Perhaps. Perhaps if I’d fought harder for supplements they would have been awarded. Or maybe not.

When I became aware of the diversity supplements, I became an instant fan. This was much more palatable. It meant that at any time a funded PI found a likely URM recruit to science, they could get the support within about 6 weeks. Great for summer research experiences for undergrads, great for unanticipated postdocs. This still seems like a very good thing to me. Good for the prospective trainees. Good for diversity-in-science goals.

The trouble is that from the perspective of the PIs in the audience, this is just another rich-get-richer scheme whereby free labor is added to the laboratory accounts of the already advantaged “haves” of the NIH game. Salary is freed up on the research grants to spend on more toys, reagents or yet another postdoc. This mechanism is only available to a PI who has research grant funding that has a year or more left to run. Since it remains an administrative decision it is also subject to buddy-buddy PI/PO relationship bias. Now, do note that I have always heard from POs in my ICs of closest concern that they “don’t expend all the funds allocated” for these URM supplements. I don’t know what to make of that but I wouldn’t be surprised in the least if any PI with a qualified award, who asks for support of a qualified individual gets one. That would take the buddy/buddy part out of the equation for this particular type of administrative supplement.

It took a while for me to become aware of the FOA version of the administrative supplement whereby Program was basically issuing a cut-rate RFA. The rich still get richer but at least there is a call for open competition. Not like the first variety I discussed whereby it seems like only some PIs, but not others, are even told by the PO that a supplement might be available. This seems slightly fairer to me although again, you have to be in the funded-PI club already to take advantage.

There are sometimes competing versions of the FOA for a topic-based supplement issued as well. In one case I am familiar with, both types were issued simultaneously. I happen to know quite a bit about that particular scenario and it was interesting to see the competing variety actually were quite bad. I wished I’d gone in for the competing ones instead of the administrative variety****, let me tell you.

The primary advantage of the administrative supplement to Program, in my viewing, is that it is fast. No need to wait for the grant review cycle. These and the competing supplements are also cheap and can be efficient, because of leverage from the activities and capabilities under the already funded award.

As per usual, I have three main goals with this post. First, if you are an underrepresented minority trainee it is good to be aware of this. Not all PIs are and not all think about it. Not to mention they don’t necessarily know if you qualify for one of these. I’d suggest bringing it up in conversations with a prospective lab you wish to join. Second, if you are a noob PI I encourage you to be aware of the supplement process and to take advantage of it as you might.

Finally, Dear Reader, I turn to you and your views on Administrative Supplements. Good? Bad? OUTRAGE?

__

COI DISCLAIMER: I’ve benefited from administrative supplements under each of the three main categories I’ve outlined and I would certainly not turn up my nose at any additional ones in the future.

*I suppose it is not impossible that in some cases outside input is solicited.

**complained vociferously

***I have had a few enraging conversations long after the fact with POs who said things like “Why didn’t you ask for help?” in the wake of some medium sized disaster with my research program. I keep to myself the fact that I did, and nobody was willing to go to bat for me until it was too late but…whatevs.

****I managed to get all the way to here without emphasizing that even for the administrative supplements you have to prepare an application. It might not be as extensive as your typical competing application but it is much more onerous than a Progress Report. Research supplements look like research grants. Fellowship-like supplements look like fellowships complete with training plan.

Bias at work

April 12, 2016

A piece in Vox summarizes a study from Nextions showing that lawyers are more critical of a brief written by an African-American.

I immediately thought of scientific manuscript review and the not-unusual request to have a revision “thoroughly edited by a native English speaker”. My confirmation bias suggests that this is way more common when the first author has an apparently Asian surname.

It would be interesting to see a similar balanced test for scientific writing and review, wouldn’t it?

My second thought was…. Ginther. Is this not another one of the thousand cuts contributing to African-American PIs’ lower success rates and need to revise the proposal extra times? Seems as though it might be.

Service contributions of faculty

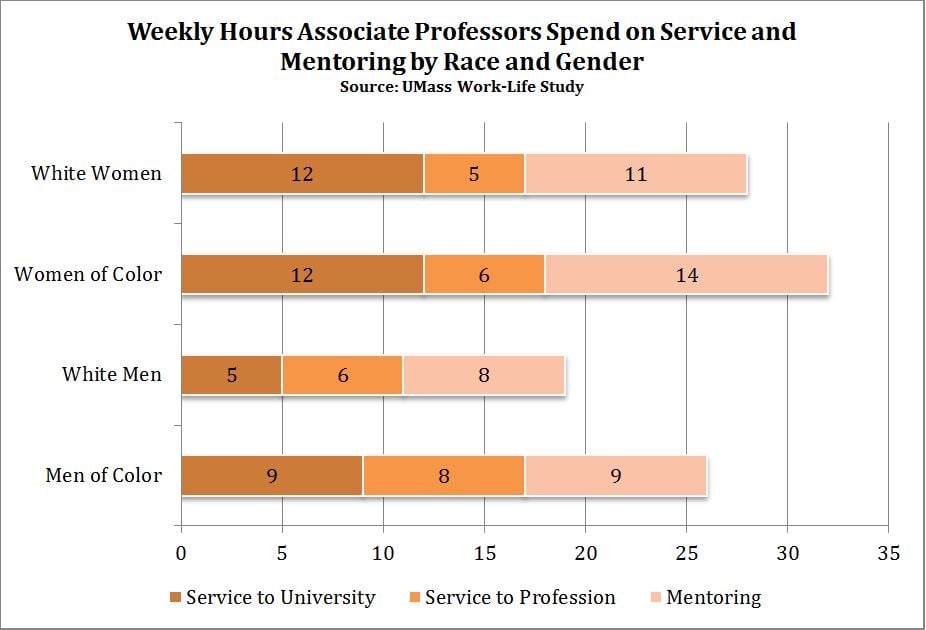

March 22, 2016

Just remember this graph when you are being told about the service requirements of your job and how “good it will look to the P&T committee” if you say yes to the next demand on your time.

In fact, you know what? Just go ahead and print this out and slip it under your Chair’s door.

via: Diversity and the Ivory Ceiling by Joya Misra and Jennifer Lundquist at Inside Higher Ed.

On whitening the CV

March 18, 2016

I heard yet another news story* recently about the beneficial effects of whitening the resume for job seekers.

I wasn’t paying close attention so I don’t know the specific context.

But suffice it to say, minority job applicants have been found (in studies) to get more call-backs for job interviews when the evidence of their non-whiteness on their resume is minimized, concealed or eradicated.

Should academic trainees and job seekers do the same?

It goes beyond using only your initials if your first name is stereotypically associated with, for example, being African-American. Or using an Americanized nickname to try to communicate that you are a highly assimilated Asian-American.

The CV usually includes awards, listed by foundation or specific award title. “Ford Foundation” or “travel award for minority scholars” or similar can give a pretty good clue. But you cannot omit those! The awards, particularly the all-important “evidence of being competitively funded”, are a key part of a trainee’s CV.

I don’t know how common it is, but I do have one colleague (i.e., professorial rank at this point) for whom a couple of those training awards were the only clear evidence on the CV of being nonwhite. This person stopped listing these items and/or changed how they were listed to minimize detection. So it happens.

Here’s the rub.

I come at this from the perspective of one who doesn’t think he is biased against minority trainees and wants to know if prospective postdocs, graduate students or undergrads are of Federally recognized underrepresented status.

Why?

Because it changes the ability of my lab to afford them. NIH has this supplement program to fund underrepresented trainees. There are other sources of support as well.

This changes whether I can take someone into my lab. So if I’m full up and I get an unsolicited email+CV I’m more likely to look at it if it is from an individual that qualifies for a novel funding source.

Naturally, the applicant can’t know in any given situation** if they are facing implicit bias against, or my explicit bias for, their underrepresentedness.

So I can’t say I have any clear advice on whitening up the academic CV.

__

*probably Kang et al.

**Kang et al. caution that institutional pro-diversity statements are not associated with increased call-backs or any minimization of the bias.

NSF Graduate Fellowship changes benefit…..?

March 8, 2016

I was wondering about the impact of the recent change in NSF rules about applying for their much desired fellowship for graduate training. Two blog posts are of help.

Go read:

I asked about your experiences with the transition from Master’s programs to PhD granting programs because of the NIH Bridges to the Doctorate (R25) Program Announcement. PAR-16-109 has the goal:

… to support educational activities that enhance the diversity of the biomedical, behavioral and clinical research workforce. … To accomplish the stated over-arching goal, this FOA will support creative educational activities with a primary focus on Courses for Skills Development and Research Experiences.

Good stuff. Why is it needed?

Underrepresentation of certain groups in science, technology and engineering fields increases throughout the training stages. For example, students from certain racial and ethnic groups including, Blacks or African Americans, Hispanics or Latinos, American Indians or Alaska Natives, Native Hawaiians and other Pacific Islanders, currently comprise ~39% of the college age population (Census Bureau), but earn only ~17% of bachelor’s degrees and ~7% of the Ph.D.’s in the biological sciences (NSF, 2015). Active interventions are required to prevent the loss of talent at each level of educational advancement. For example, a report from the President’s Council of Advisors on Science and Technology recommended support of programs to retain underrepresented undergraduate science, technology, engineering and math students as a means to effectively build a diverse and competitive scientific workforce (PCAST Report, 2012).

[I actually took this blurb from the related PAR-16-118 because it had the links]

And how is this Bridges to the Doctorate supposed to work?

The Bridges to Doctorate Program is intended to provide these activities to master’s level students to increase transition to and completion of PhDs in biomedical sciences. This program requires partnerships between master’s degree-granting institutions with doctorate degree-granting institutions.

Emphasis added. Oh Boy.

God forbid we have a NIH program that doesn’t ensure that in some way the rich, already heavily NIH-funded institutions get a piece of the action.

So what is it really supposed to be funding? Well first off you will note it is pretty substantial: “Application budgets are limited to $300,000 direct costs per year. The maximum project period is 5 years”. Better than a full-modular R01! (Some of y’all will also be happy to note that it only comes with 8% overhead.) It is not supposed to fully replace NRSA individual fellowships or training grants.

Research education programs may complement ongoing research training and education occurring at the applicant institution, but the proposed educational experiences must be distinct from those training and education programs currently receiving Federal support. R25 programs may augment institutional research training programs (e.g., T32, T90) but cannot be used to replace or circumvent Ruth L. Kirschstein National Research Service Award (NRSA) programs.

That is less than full support to prospective trainees to be bridged. So what are the funds for?

Salary support for program administration, namely, the PDs/PIs, program coordinator(s), or administrative/clerical support is limited to 30% of the total direct costs annually.

Nice.

Support for faculty from the doctoral institution serving as visiting lecturers, offering lectures and/or laboratory courses for skills development in areas in which expertise needs strengthening at the master’s institution;

Support for faculty from the master’s degree-granting institution for developing or implementing special academic developmental activities for skills development; and

Support for faculty/consultants/role models (including Bridges alumni) to present research seminars and workshops on communication skills, grant writing, and career development plans at the master’s degree institution(s).

Cha-ching! One element for the lesser-light Master’s U, one element for the Ivory Tower faculty and one slippery slope that will mostly be the Ivory Tower faculty, I bet. Woot! Two to one for the Big Dogs!

Just look at this specific goal… who is going to be teaching this, can we ask? The folks from the R1 partner-U, that’s who. Who can be paid directly, see above, for the effort.

1. Courses for Skills Development: For example, advanced courses in a specific discipline or research area, or specialized research techniques.

Oh White Man’s R1 Professor’s Burden!

Ahem.

Okay, now we get to the Master’s level student who wishes to benefit. What is in it for her?

2. Research Experiences: For example, for graduate and medical, dental, nursing and other health professional students: to provide research experiences and related training not available through formal NIH training mechanisms; for postdoctorates, medical residents and faculty: to extend their skills, experiences, and knowledge base.

Yeah! Sounds great. Let’s get to it.

Participants may be paid if specifically required for the proposed research education program and sufficiently justified.

“May”. May? MAY???? Wtf!??? Okay if this is setting up unpaid undergraduate style “research experience” scams, I am not happy. At all. This better not be what occurs. Underrepresented folks are disproportionally unlikely to be able to financially afford such shenanigans. PAY THEM!

Applicants may request Bridges student participant support for up to 20 hours per week during the academic year, and up to 40 hours/week during the summer at a pay rate that is consistent with the institutional pay scale.

That’s better. And the pivot of this program, if I can find anything at all to like about it. Master’s students can start working in the presumably higher-falutin’ laboratories of the R1 partner and do so for regular pay, hopefully commensurate with what that University is paying their doctoral graduate students.

So here’s what annoys me about this. Sure, it looks good on the surface. But think about it. This is just another way for fabulously well funded R1 University faculty to get even more cheap labor that they don’t have to pay for from their own grants. 20 hrs a week during the school year and 40 hrs a week during the summer? From a Master’s level person bucking to get into a doctoral program?

Sign me the heck UP, sister.

Call me crazy but wouldn’t it be better just to lard up the underrepresented-group-serving University with the cash? Wouldn’t it be better to give them infrastructure grants, and buy the faculty out of teaching time to do more research? Wouldn’t it be better to just fund a bunch more research grants to these faculty at URGSUs? (I just made that up).

Wouldn’t it be better to fund PIs from those underrepresented groups at rates equal to good old straight whitey old men at the doctoral granting institution so that the URM “participants” (why aren’t they trainees in the PAR?) would have somebody to look at and think maybe they can succeed too?

But no. We can’t just hand over the cash to the URGSUs without giving the already-well-funded University their taste. The world might end if NIH did something silly like that.