Why do we participate in manuscript review?

December 21, 2020

Why indeed.

I have several motivations, deployed variably, and therefore my answers to Professor Eisen's question about a journal-less world vary.

First and foremost, I review manuscripts as a reciprocal professional obligation, motivated by my desire to get my own papers published. It is distasteful free-rider behavior not to review at least as often as you require the field to review for you. That is, approximately 3 times your number of unique-journal submissions. Should we ever reach a point where I do not expect any such review of my work to be necessary, this prime motivator goes to zero. So, “none”.

The least palatable (to me) motivation is the gatekeeper motivation. I do hope this is the rarest of reviews that I write. Gatekeeper motivation leads to reviews that try really hard to get the editor to reject the manuscript, or to persuade the authors that this really should not be presented to the public in anything conceivably related to its current form. In my recollection, I write these when the work is too slim even for my rather expansive views on “least publishable unit”, or when there is some really bad interpretation or experimental design going on. In a world where these works appeared as pre-prints, I think I would be mostly unmotivated to supply my thoughts in public. Mostly because I think the problems would just be obvious to anyone in the field, so what is the point of me posturing around in some bioRxiv comment field about how smart I was to notice them?

In the middle of this space I have the motivation to try to improve the presentation of work that I have an interest in. The most fun papers to review for me are, of course, the ones directly related to my professional interests. For the most part, I am motivated to see at least some part of the work in print. I hope my critical comments are mostly in the nature of “you need to rein back your expansive claims” and only much less often in the vein of “you need to do more work on what I would wish to see next”. I hate those when we get them and I hope I only rarely make them.

This latter motivation is, I expect, the one that would most drive me to make comments in a journal-less world. I am not sure that I would do much of this, and the entirely obvious sources of bias in deciding whether or not to comment make it even more likely that I wouldn’t. Look, there isn’t much value in a bunch of congratulatory comments on a scientific paper. The value is in critique and in drawing together a series of implications for our knowledge on the topic at hand. The latter is what review articles are for, and I am not personally big into those. So that wouldn’t motivate me. Critique? What’s the value? In pre-publication review there is some chance that critique will result in changes where it counts: data re-analysis, maybe some more studies added, a more focused interpretive narrative, better contextualization of the work…etc. In post-publication review, it is much less likely to result in any changes. Maybe a few readers will notice something that they didn’t already come up with for themselves. Maybe. I don’t have the sort of arrogance that thinks I’m some sort of brilliant reader of the paper. I think people who envision some new world order where the unique brilliance of their critical reviews is made public have serious narcissism issues, frankly. I’m open to discussion on that but it is my gut response.

On the flip side of this is cost. If you don’t think the process of peer review in subfields is already fraught with tit-for-tat vengeance seeking even when it is single-blind, well, I have a Covid cure to sell you. This will motivate people not to post public, unblinded critical comments on their peers’ papers, because they don’t want to trigger revenge behaviors. It won’t just be a tit-for-tat waged in these “overlay” journals of the future or in the comment fields of pre-print servers. Oh no. It will bleed over into all of the areas of academic science, including grant review, assistant professor hiring, promotion letters, etc., etc. I appreciate that Professor Eisen has an optimistic view of human nature and believes these issues to be minor. I do not have an optimistic view of human nature and I believe these issues to be hugely motivational.

We’ve seen various attempts at online, post-publication commentary of the journal-club variety crash and burn over the years. Decades, by now. The efforts die because of a lack of use. Always. People in science just don’t make public review-type comments, despite the means being readily available and simple. I assure you it is not because they do not have interesting and productive views on published work. It is because they see very little positive value and a whole lot of potential harm for their careers.

“How do we change this?” I feel sure Professor Eisen would challenge me.

I submit to you that we start by looking at those who are already keen to take up such commentary: those who offer their opinions on the work of colleagues at the drop of a hat, with nary a care about how it will be perceived. Why do they do it?

I mean yes, narcissistic assholes, sure, but that’s not the general point.

It is those who feel themselves unassailable. Those who do not fear* any real risk of their opinions triggering revenge behavior.

In short, the empowered. Tenured. HHMI funded.

So, in order to move into a glorious new world of public post-publication review of scientific works, you have to make everyone feel unassailable. As if their opinion does not have to be filtered, modulated or squelched because of potential career blow-back.

__

*Sure, there are those dumbasses who know they are at risk of revenge behavior but can’t stfu with their opinions. I don’t recommend this as an approach, based on long personal experience.

Stupid JIF tricks, take eleven

November 3, 2020

As my longer-term Readers are well aware, my laboratory does not play in the Glam arena. We publish in society-type journals and not usually the fancier ones, either. This is a category thing, in addition to my stubbornness. I have occasionally pointed out how my papers that were rejected summarily by the fancier society journals tend to go on to get cited better than their median and often their mean (i.e., their JIF) in the critical window where it counts. This, I will note, is at journals with only slightly better JIF than the vast herd of workmanlike journals in my fields of interest, i.e., with JIF from ~2-4.

There are a lot of journals packed into this space. For the real JIF-jockeys and certainly the Glam hounds, the difference between a JIF 2 and JIF 4 journal is imperceptible. Some are not even impressed in the JIF 5-6 zone where the herd starts to thin out a little bit.

For those of us who publish regularly in the herd, I suppose there might be some slight idea that journals toward the JIF 4-5 range are better than journals in the JIF 2-3 range. Very slight.

And if you look at who is on editorial boards, who is EIC, who is AE and who is publishing at least semi-regularly in these journals you would be hard pressed to discern any real difference.

Yet, as I’ve also often related, people associated with running these journals all seem to care. They always talk to their Editorial Boards in a pleading way to “send some of your work here”. In some cases for the slightly fancier society journals with airs, they want you to “send your best work here”….naturally they are talking here to the demiGlam and Glam hounds. Sometimes at the annual Editorial Board meeting the EIC will get more explicit about the JIF, sometimes not, but we all know what they mean.

And to put a finer point on it, the EIC often mentions specific journals that they feel they are in competition with.

Here’s what puzzles me. Aside from the fact that a few very highly cited papers would jazz up the JIF of the lowly journals if the EIC, the AEs or a few choice EB members were to actually take one for the team (and they never do), the ONLY things I can see that these journals can compete on are 1) rapid and easy acceptance without a lot of demands for more data (really? at JIF 2? no.) and 2) speed of publication after acceptance.

My experience over the years is that journals of interchangeable JIF levels vary widely in the speed of publication after acceptance. Some have online pre-print queues that stretch for months. In some cases, over a year. A YEAR to wait for a JIF 3 paper to come out “in print”? Ridiculous! In other cases it can be startlingly fast. As in assigned to a “print” issue within two or three months of the acceptance. That seems…..better.

So I often wonder how this system is not more dynamic and free-market-y. I would think that as the pre-print list stretches out to 4 months and beyond, people would stop submitting papers there. The journal's list would then shrink as the input slowed down. Conversely, as a journal starts to head toward only 1/4 of an issue in the pre-print list, authors would submit there preferentially, trying to get in on the speed.

Round and round it would go, but the ecosphere should be more or less in balance, long term. Right?

Acknowledging undergraduate labor in academic papers

July 2, 2020

It is time. Well past time, in fact.

Time for the Acknowledgements sections of academic papers to report on a source of funding that is all too often forgotten.

In fact, I cannot remember a single paper, or manuscript I have received to review, that mentions it.

It’s not weird. Most academic journals I am familiar with do demand that authors report the source of funding. Sometimes there is an extra declaration that we have reported all sources. It’s traditional. Grants for certain sure. Gifts in kind from companies are supposed to be included as well (although I don’t know if people include special discounts on key equipment or reagents, tut, tut).

In recent times we’ve seen the NIH get all astir precisely because some individuals were not reporting funding to them that did appear in manuscripts and publications.

The statements about funding often come with some sort of comment that the funding agency or entity had no input on the content of the study or on the decision whether or not to publish the data.

The uses of these declarations are several. Readers want to know where there are potential sources of bias, even if the authors have just asserted no such thing exists. Funding bodies rightfully want credit for what they have paid hard cash to create.

Grant peer reviewers want to know how “productive” a given award has been, for better or worse and whether they are being asked to review that information or not.

It’s common stuff.

We put in both the grants that paid for the research costs and any individual fellowships or traineeships that supported any postdocs or graduate students. We assume, of course, that any technicians have been paid a salary and are not donating their time. We assume the professor types likewise had their salary covered during the time they were working on the paper. There can be small variances but these assumptions are, for the most part, valid.

What we cannot assume is the compensation, if any, provided to any undergraduate or secondary school authors. That is because this is a much more varied reality, in my experience.

Undergraduates could be on traineeships or fellowships, just like graduate students and postdocs. Summer research programs are often compensated with a stipend and housing. There are other fellowships active during the academic year. Some students are on work-study and are paid a salary as part of their school-related financial aid… in a good lab this can be something more advanced than mere dishwashing or cage changing.

Some students receive course credit, as their lab work is considered a part of the education that they are paying the University to receive.

Sometimes this course credit is an optional choice- something that someone can choose to do but is not absolutely required. Other times this lab work is a requirement of a Major course of study and is therefore something other than optional.

And sometimes…..

…sometimes that lab work is compensated with only the “work experience” itself. Perhaps with a letter or a verbal recommendation from a lab head.

I believe journals should extend their requirement to Acknowledge all sources of funding to the participation of any trainees who are not being compensated from a traditionally cited source, such as a traineeship. There should be lines such as:

“Author JOB participated in this research as part of an undergraduate course fulfilling the obligations of a Major in Psychology.”

“Author KRN volunteered in the lab for ~10 h a week during the 2020-2021 academic year to gain research experience.”

“Author TAD volunteered in the lab as part of a high school science fair project supported by his dad’s colleague.”

Etc.

I’m not going to go into a long song and dance as to why…I think when you consider what we do traditionally include, the onus is quickly upon us to explain why we do NOT already do this.

Can anyone think of an objection to stating the nature of the participation of students prior to the graduate school level?

Impact, in the Time of Corona

May 8, 2020

In an earlier post I touched on themes that are being kicked around the Science Twitters about how perhaps we should be easing up on the criteria for manuscript publication. The discussion is probably most focused on the practice of demanding that additional experiments be conducted, something that is not possible for those who have shut down their laboratory operations for the Corona Virus Crisis.

I, of course, find all of this fascinating because I think in regular times, we need to be throttling back on such demands.

The reasons for such demands vary, of course. You can dress it up all you want with fine talk of “need to show the mechanism” and “need to present a complete story” and, most nebulously, “enhance the impact”. This is all nonsense. From the perspective of the peers who are doing the reviewing there are really only two motivations.

- Competition

- The unregulated desire we all have to see more, more, more data if we find the topic of interest.

From the side of the journal itself, there is only one perspective and that is competitive advantage in the marketplace. The degree to which the editorial staff fall strictly on the side of the journal, strictly on the side of the peer scientists or some uncomfortable balance in between varies.

But as I’ve said before, I have had occasion to see academic editors in action and they all, at some point, get pressure to improve their impact factor. Often this is from the publisher. Sometimes, it is from the associated academic society which is grumpy about “their” journal slowly losing the cachet it used to have (real or imagined).

So, do the standards governing the nitty-gritty of demands for more data, which bear directly on the Time of Corona slowdowns and shutdowns, actually affect Impact? Is there a reason that a given journal should try to just hold on to business as usual? Or is there an argument that topicality is key, that papers get cited for reasons not having to do with the extra conceits about “complete story” or “shows mechanism”, and that it would be better just to accept the papers if they seem to be of current interest in the field?

I’ve written at least one post in the past with the intent to:

encourage you to take a similar quantitative look at your own work if you should happen to be feeling down in the dumps after another insult directed at you by the system. This is not for external bragging, nobody gives a crap about the behind-the-curtain reality of JIF, h-index and the like. You aren’t going to convince anyone that your work is better just because it outpoints the JIF of a journal it didn’t get published in. …It’s not about that…This is about your internal dialogue and your Imposter Syndrome. If this helps, use it.

There is one thing I didn’t really explore in the whingy section of that post, where I was arguing that the citations of several of my papers published elsewhere showed how stupid it was for the editors of the original venue to reject them. And it is relevant to the Time of Corona discussions.

I think a lot of my papers garner citations based on timing and topicality more than much else. For various reasons I tend to work in thinly populated sub-sub-areas where you would expect the relatively specific citations to arise. Another way to say this is that my papers are “not of general interest”, which is a subtext, or explicit reason, for many a rejection in the past. So the question is always: Will it take off?

That is, this thing that I’ve decided is of interest to me may be of interest to others in the near or distant future. If it’s in the distant future, you get to say you were ahead of the game. (This may not be all that comforting if disinterest in the now has prevented you from getting or sustaining your career. Remember that guy who was Nobel adjacent but had been driving a shuttle bus for years?) If it’s in the near future, you get to claim leadership or argue that the work you published showed others that they should get on this too. I still believe that the sort of short timeline that gets you within the JIF calculation window may be more a factor of happening to be slightly ahead of the others, rather than your papers stimulating them de novo, but you get to claim it anyway.

For any of these things, does it matter that you showed mechanism or provided a complete story? Usually not. Usually it is the timing. You happened to publish first and the other authors coming along several months in your wake are forced to cite you. Only in the more distant, medium term do you maybe start seeing citations of your paper from work that was truly motivated by it and depends on it. I’d say a 2+ year runway on that.

These citations, unfortunately, will come in just past the JIF window and don’t contribute to the journal’s desire to raise its impact.

I have a particular journal which I love to throw shade at because they reject my papers at a high rate and then those papers very frequently go on to beat their JIF. I.e., if they had accepted my work it would have been a net boost to their JIF….assuming the lower performing manuscripts that they did accept were rejected in favor of mine. But of course, the reason that their JIF continues to languish behind where the society and the publisher thinks it “should” be is that they are not good at predicting what will improve their JIF and what will not.

In short, their prediction of impact sucks.
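The arithmetic behind that claim can be made concrete. A JIF is essentially a mean: the citations received in a given year by the items a journal published in the two previous years, divided by the number of citable items from those two years. The minimal Python sketch below uses invented citation counts for a hypothetical journal (not real data) and a hypothetical helper, `swap_in`, that replaces the journal's lowest-cited item with a paper it rejected.

```python
from statistics import median

# Invented citation counts (within the two-year JIF window) for the ten
# citable items a hypothetical journal published. Not real data.
accepted = [0, 1, 1, 2, 2, 3, 3, 4, 6, 18]


def jif(citations):
    """JIF as a plain mean: window citations divided by citable items."""
    return sum(citations) / len(citations)


def swap_in(citations, rejected_paper):
    """Hypothetical helper: drop the lowest-cited accepted item and
    substitute a paper the journal rejected, keeping the item count fixed."""
    ranked = sorted(citations)
    return ranked[1:] + [rejected_paper]


# One highly cited item (18) drags the mean well above the median.
print(f"JIF {jif(accepted):.1f}, median {median(accepted)}")
for rejected in (3, 5):
    print(f"swap in a {rejected}-citation paper -> JIF {jif(swap_in(accepted, rejected)):.1f}")
```

With these made-up numbers the JIF is 4.0 while the median is 2.5, and swapping in either a 3- or a 5-citation reject raises the JIF (to 4.3 or 4.5). Because the JIF is a mean over a skewed distribution, a rejected paper does not even have to beat the JIF to help; it only has to outperform the accepted item it would have displaced.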

Today’s musings were brought on by something slightly different, which is that I happened to be reviewing a few papers that this journal did publish, in a topic domain reasonably close to mine. They are not particularly more “complete story”, but, and I will fully admit this, they do seem a little more “shows mechanism” sciency, in a limited way in which my work could have been. I just find that particular approach to be pedantic and ultimately of lesser importance than broader strokes.

These papers are not outpointing mine. They are not being cited at rates that are significantly inflating the JIF of this journal. They are doing okay, I rush to admit. They are about the middle of the distribution for the journal and pacing some of the more middle-ground offerings in my whinge category. Nothing appears to be matching my handful of better ones, though.

Why?

Well, one can speculate that we were on the earlier side of things. And the initial basic description (gasp) of certain facts was a higher demand item than would be a more quantitative (or otherwise sciencey-er) offering published much, much later.

One can also speculate that for imprecise reasons our work was of broader interest in the sense that we covered a few distinct sub-sub-sub field approaches (models, techniques, that sort of thing) instead of one, thereby broadening the reach of the single paper.

I think this is relevant to the Time of Corona and the slackening of demands for more data upon initial peer review. I just don’t think in the balance, it is a good idea for journals to hold the line. Far better to get topical stuff out there sooner rather than later. To try to ride the coming wave instead of playing catchup with “higher quality” work. Because for the level of journal I am talking about, they do not see the truly breathtakingly novel stuff. They just don’t. They see workmanlike good science. And if they don’t accept the paper, another journal will quite quickly.

And then the fish that got away will be racking up JIF points for that other journal.

This also applies to authors, btw. I mean sure, we are often subject to evaluation based on the journal identity and JIF rather than the actual citations to our papers. Why do you think I waste my time submitting work to this one? But there is another game afoot as well, and that game does depend on individual paper citations, which are benefited by getting that paper published and in front of people as quickly as possible. It’s not an easy calculation. But I think that in the Time of Corona you should probably shift your needle slightly in the “publish it now” direction.

Reading the literature and writing

April 20, 2020

Well respected addiction neuroscientist Nick Gilpin posted a twitter poll asking people about their favorite part of doing science.

Only 4% of the respondents voted for “Reading the literature”. Now look, it’s just a dumb little twitter poll and it was a forced choice about a person’s favorite. Maybe reading is a super close second place for everyone who responded with something else as their first choice, I don’t know.

However. Experience in this field, reviewing manuscripts submitted to journals, reading manuscripts published in journals, fielding comments from reviewers about our manuscripts and trying to help trainees learn to write a scientific manuscript suggests to me that it is more than this.

I think a lot of scientists really don’t like to read deeply into the literature. At best, perhaps they weren’t ever trained to read deeply.

As a mentor, I tip toe around this issue a lot more than I should. I think, I guess, that it would be sort of insulting to ask a postdoc if they even know how to read deeply into a literature for the purposes of writing up scientific results. So I take the hint approach. I take the personal example approach. Even my direct instruction is a little indirect.

The personal example approach has a clear failure point. The hint may suffer from it somewhat as well. The direct instructions that I do manage to give (“Hey, we need to look into this set of issues so we know what to write”) are only slightly better. The failure point I am talking about is that part of reading deeply into the literature is a triage process.

The triage process is one of elimination. Of looking at a paper that might, from the title or even the Abstract, be relevant. In the vast majority of cases you are going to quickly figure out that it is not something that contributes to the knowledge under discussion in this particular paper. To me this is under the heading of reading the literature. It doesn’t mean you read every paper word for word, at all. Maybe this is part of the problem for some people? That they think it really means reading every frigging search result from start to finish? I can see how that would be daunting.

But it IS work. It takes many hours, at times, of searching through PubMed, Google Scholar or Web of Science using several variant keyword searches. Of then scanning papers as needed to see if they have relevant information. Sometimes you can triage based on the abstract. Sure. But a lot of the time you have to download the paper and take a look through it. Sometimes you are just checking for a detail that didn’t make it into the abstract and finding that, nope, the paper isn’t relevant. But sometimes it is and you have to read it. And then maybe it would be a good idea to look at its citations and follow the threads to additional papers. Maybe you should use Web of Science to find out what subsequent papers cited that particular paper. All of this work to come up with three or two or even one sentence with three surviving citations.

The person who is following my writing by example doesn’t necessarily see this. They may think I just pulled three cites at random and kept on going. They may think that somehow it is my vast experience that has all of this literature in my head all at the same time. Despite me saying more or less the above as reminders. When I say things like “hey, everybody works differently, but when I do a PubMed search on a topic, I like to start with the oldest citations and work forward from there”, I mean this as a hint that they should actually do PubMed searches on topic terms.

(I admit in early graduate school I was really intimidated by the perception that the Professors did have encyclopedic knowledge of everything, all of the time. I don’t think that any more. I mean, yes, my colleagues over the years clearly vary and some are incredibly good at knowing the literature. But some are just highly specific in their knowledge and if you get too far outside they flounder. Some know lots of key papers, sure, but if you REALLY are digging in deeply, you figure out they don’t know everything. Not the way a grad student on dissertation defense day should.)

So I’m not talking about reading the literature in a “keep up with the latest TOCs for all the relevant journals” kind of way. I’m talking about focused reading when you want to answer a question for yourself and to put it into some sort of scholarly setting, like a Discussion.

I have no recollection of being taught to read a literature. I just sort of DID that as a graduate student. I was in the kind of lab where you were expected to really develop your own ideas almost from whole cloth, rather than fitting into an existing program of work. Some of my fellow grad students were in labs more like mine, some were in labs where they fit into existing programs. So it wasn’t the grad program itself, just an accident of the training lab. Still, it wasn’t as though I chatted much with my fellow students about something like this so I have no idea how they were trained to read the literature. I have no recollection of how much time anyone spent in the library (oh yes, children, this was before ready access to PDFs from your desktop) compared with me.

So, about this poll that Professor Gilpin put up. How about you, Dear Reader? How do you feel about reading the literature? Were you taught how to do it? Especially as it pertains to writing papers that reference said literature (as opposed to reading to guide your experiments in the first place, another topic for another day). Have you tried to explicitly train your mentees to do so, or do they just pick it up? Is it always the case that reviewers of your manuscripts hand-wavily suggest you have overlooked some key literature, and they are right? Or is it the case that you know all about what they mean and have triaged it as not relevant? Or that you know that what they think “surely must exist” really doesn’t?

BJP issues new policy on SABV

September 4, 2019

The British Journal of Pharmacology has been issuing a barrage of initiatives over the past few years that are intended to address numerous issues of scientific meta-concern including reproducibility, reliability and transparency of methods. The latest is an Editorial on how they will address current concerns about including sex as a biological variable.

Docherty et al. 2019 Sex: A change in our guidelines to authors to ensure that this is no longer an ignored experimental variable. https://doi.org/10.1111/bph.14761

I’ll skip over the blah-blah about why. This audience is up to speed on SABV issues. The critical parts are what they plan to do about it, with respect to future manuscripts submitted to their journal. tldr: They are going to shake the finger but fall woefully short of heavy threats or of prioritizing manuscripts that do a good job of inclusion.

From Section 4 BJP Policy: The British Journal of Pharmacology has decided to rectify this neglect of sex as a research variable, and we recommend that all future studies published in this journal should acknowledge consideration of the issue of sex. In the ideal scenario for in vivo studies, both sexes will be included in the experimental design. However, if the researcher’s view is that sex or gender is not relevant to the experimental question, then a statement providing a rationale for this view will be required.

Right? Already we see immense weaseling. What rationales will be acceptable? Will those rationales be applied consistently for all submissions? Or will this be yet another frustrating feature for authors in which our manuscripts appear to be rejected on grounds that other papers published seem to suffer from?

We acknowledge that the economics of investigating the influence of sex on experimental outcomes will be difficult until research grant‐funding agencies insist that researchers adapt their experimental designs, in order to accommodate sex as an experimental variable and provide the necessary resources. In the meantime, manuscripts based on studies that have used only one sex or gender will continue to be published in BJP. However, we will require authors to include a statement to justify a decision to study only one sex or gender.

Oh, a statement. You know, the NIH has (sort of, weaselly) “insisted”. But as we know, the research force is fighting back, insisting that we don’t have “necessary resources” and, several years into this policy, researchers are blithely presenting data at conferences with no mention of addressing SABV.

Overall sex differences and, more importantly, interactions between experimental interventions and sex (i.e., the effect of the intervention differs in the two sexes) cannot be inferred if males and females are studied in separate time frames.

Absolutely totally false. False, false, false. This has come up in more than one of my recent reviews and it is completely and utterly, hypocritically wrong. Why? Several reasons. First of all, in my fields of study it is exceptionally rare that large, multi-group, multi-sub-study designs (in a single sex) are conducted with all groups run in parallel. It is resource intensive and generally unworkable. Many, many, many studies include comparisons across groups that were not run at the same time in some sort of cohort-balancing design. And whaddaya know, those studies often replicate with all sorts of variation across labs, not just across time within a lab. In fact this is a strength. Second, in my fields of study, we refer to prior literature all the time in our Discussion sections to draw parallels and contrasts. In essentially zero cases do the authors simply throw up their hands and say “well, since nobody has run studies at the same time and place as ours, there is nothing worth saying about that prior literature”. You would be rightfully laughed out of town.

Third concern: It’s my old saw about “too many notes”. Critique without an actual reason is bullshit. In this case you have to say why you think the factor you don’t happen to like for Experimental Design 101 reasons (running studies in series instead of parallel) has contributed to the difference. If one of my peer labs says they did more or less the same methods this month compared to last year compared to five years ago…wherein lies the source of non-sex-related variance which explains why the female group self-administered more cocaine compared with the before, after and in-between male groups which all did the same thing? And why are we so insistent about this for SABV and not for the series of studies in males that reference each other?

In conscious animal experiments, a potential confounder is that the response of interest might be affected by the close proximity of an animal of the opposite sex. We have no specific recommendation on how to deal with this, and it should be borne in mind that this situation will replicate their “real world.” We ask authors merely to consider whether or not males and females should be physically separated, to ensure that sight and smell are not an issue that could confound the results, and to report on how this was addressed when carrying out the study. Obviously, it would not be advisable to house males and females in different rooms because that would undermine the need for the animals to be exposed to the same environmental factors in a properly controlled experiment.

NO SHIT SHERLOCK!

Look, there are tradeoffs in this SABV business when it comes to behavior studies, and no doubt others. We have many sources of potential variance that could be misinterpreted as a relatively pure sex difference. We cannot address them all in each and every design. We can’t. You would have to run groups that were housed together, and not, in rooms together and not, at times similar and apart AND MULTIPLY THAT AGAINST EACH AND EVERY TREATMENT CONDITION YOU HAVE PLANNED FOR THE “REAL” STUDY.

Unless the objective of the study is specifically to investigate drug‐induced responses at specific stages of the oestrous cycle, we shall not require authors to record or report this information in this journal. This is not least because procedures to determine oestrous status are moderately stressful and an interaction between the stress response and stage of the oestrous cycle could affect the experimental outcome. However, authors should be aware that the stage of the oestrous cycle may affect response to drugs particularly in behavioural studies, as reported for actions of cocaine in rats and mice (Calipari et al., 2017; Nicolas et al., 2019).

Well done. Except why cite papers where there are oestrous differences without similarly citing cases where there are no oestrous differences? It sets up a bias that has the potential to undercut the more correct way they start Section 5.5.

My concern with all of this is not the general support for SABV. I like that. I am concerned first that it will be toothless in the sense that studies which include SABV will not be prioritized and some, not all, authors will be allowed to get away with thin rationales. This is not unique to BJP; I suspect the NIH is failing hard at this as well. And without incentives (easier acceptance of manuscripts, better grant odds) or punishments (auto rejects, grant triages), behavior won’t change, because the other incentives (faster movement on “real” effects and designs) will dominate.

Wait, HOW is the JIF calculated?

April 29, 2019

I still didn’t understand the calculation of Journal Impact Factor. Or rather, I didn’t until today. Not completely. I mean, yes, I had the basic idea that it was citations divided by the number of citable articles published in the past two years. However, when I wrote blog posts talking about how you should evaluate your own articles in this context (e.g., this one), I didn’t get it quite right. The definition from the source:

the impact factor of a journal is calculated by dividing the number of current year citations to the source items published in that journal during the previous two years

So when we assess how our own article contributes to the journal impact factor of the journal it was published in, we need to look at citations in the second and third calendar years. It will never count the first calendar year of publication, somewhat getting around the question of whether something has been available to be seen and cited for a full calendar year before it “counts” for JIF purposes. So when I wrote:
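To make the calendar arithmetic concrete, here is a minimal sketch with made-up numbers. The function names and inputs are hypothetical, and it glosses over how Clarivate actually decides what counts as a “citable item” (the real numerator counts citations to everything the journal published, while the denominator counts only citable items). The point is just the window: the JIF for year Y uses items published in Y-1 and Y-2, so an article published in year P only ever feeds the JIFs for P+1 and P+2.

```python
def jif(year, citations_in_year, citable_items):
    """Sketch of an impact factor for `year` (hypothetical inputs).

    citations_in_year: publication year -> citations received during `year`
        by items the journal published in that publication year.
    citable_items: publication year -> number of citable items published.
    """
    window = (year - 1, year - 2)  # only the two prior publication years count
    cites = sum(citations_in_year.get(y, 0) for y in window)
    items = sum(citable_items.get(y, 0) for y in window)
    return cites / items


def jif_years_for_article(pub_year):
    """Calendar years whose JIF an article published in `pub_year` feeds."""
    # The publication year itself (the first calendar year) never counts.
    return (pub_year + 1, pub_year + 2)


# Hypothetical journal: citations received in 2017, keyed by publication year.
cites_2017 = {2016: 450, 2015: 620, 2014: 300}  # 2014 items are outside the window
items = {2016: 100, 2015: 110, 2014: 95}
print(round(jif(2017, cites_2017, items), 3))  # 1070 / 210 -> 5.095
print(jif_years_for_article(2015))             # (2016, 2017)
```

So a paper published in 2015 is judged, for JIF purposes, entirely on what it picks up in 2016 and 2017.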

The fun game is to take a look at the articles that you’ve had rejected at a given journal (particularly when rejection was on impact grounds) but subsequently published elsewhere. You can take your citations in the “JCR” (aka second) year of the two years after it was published and match that up with the citation distribution of the journal that originally rejected your work. In the past, if you met the JIF number, you could be satisfied they blew it and that your article indeed had impact worthy of their journal. Now you can take it a step farther because you can get a better idea of when your article beat the median. Even if your actual citations are below the JIF of the journal that rejected you, your article may have been one that would have boosted their JIF by beating the median.

I don’t think I fully appreciated that you can look at citations in the second and third year and totally ignore the first year of citations. Look at the second and third calendar year of citations, individually, or average them together as a shortcut. Either way, if you want to know if your paper is boosting the JIF of the journal, those are the citations to focus on. Certainly in my mind, when I did the below-mentioned analysis, I used to think I had to look at the first year and sort of grumble to myself about how it wasn’t fair, it was published in the second half of the year, etc. And the second year “really counted”. Well, I was actually closer with my prior excuse making than I realized. You look at the second and third years.
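If you want to run this check on your own papers, the averaging shortcut is a few lines. This is a hypothetical sketch: the per-year citation counts are invented, and you would pull your real ones from whatever citation database you use. It simply ignores the publication year, takes years two and three, averages them, and compares against a JIF you supply.

```python
from statistics import mean


def jcr_window_citations(citations_by_year, pub_year):
    """Citations in the 2nd and 3rd calendar years (pub_year+1, pub_year+2)."""
    return [citations_by_year.get(pub_year + k, 0) for k in (1, 2)]


def beats_jif(citations_by_year, pub_year, journal_jif):
    """Shortcut: average the two counting years and compare to a JIF."""
    return mean(jcr_window_citations(citations_by_year, pub_year)) > journal_jif


# Hypothetical article published mid-2015; its 2015 citations are ignored.
article = {2015: 2, 2016: 9, 2017: 14, 2018: 12}
print(jcr_window_citations(article, 2015))  # [9, 14]
print(beats_jif(article, 2015, 5.97))       # mean 11.5 > 5.97 -> True
```

Averaging is the lazy version; strictly, the 2016 count feeds the 2016 JIF and the 2017 count feeds the 2017 JIF, so you could compare each year separately.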

Obviously this also applies to the axe grinding part of your analysis of your papers. I was speaking with two colleagues recently; the details differed, but basically it boils down to being a little down in the dumps about academic disrespect. As you know, Dear Reader, one of the things that I detest most about the way academic science behaves is the constant assault on our belongingness. There are many forces that try to tell you that you suck and your science is useless and you don’t really deserve to have a long and funded career doing science. The much discussed Imposter Syndrome arises from this and is accelerated by it. I like to fight back against that, and give you tools to understand that the criticisms are nonsense. One of these forces is that of journal Impact Factor and the struggle to get your manuscripts accepted in higher and higher JIF venues.

If you are anything like me you may have a journal or two that is seemingly interested in publishing the kind of work you do, but for some reason you juuuuuust miss the threshold for easy acceptance, which leads to frequent rejection. In my case it is invariably over perceived impact with a side helping of “lacks mechanism”. Now these just-miss kinds of journals have to be within the conceivable space to justify getting analytical about it. I’m not talking about stretching way above your usual paygrade. In our case we get things in this one particular journal occasionally. More importantly, there are other people who get stuff accepted that is not clearly different from ours on the very dimensions on which ours are rejected. So I am pretty confident it is a journal that should seriously consider our submissions (and to their credit, ours nearly always go out for review).

This has been going on for quite some time and I have a pretty decent sample of our manuscripts that have been rejected at this journal, published elsewhere essentially unchanged (beyond the minor revisions type of detail) and have had time to accumulate the first three years of citations. This journal is seriously missing the JIF boat on many of our submissions. The best one beat their JIF by a factor of 4-5 at times and has settled into a sustained citation rate of about double theirs. It was published in a journal with a JIF about two-thirds as high. I have numerous other examples of manuscripts rejected over “impact” grounds that at least met that journal’s JIF and in most cases ran 1.5-3x the JIF in the critical second and third calendar years after publication.

Fascinatingly, a couple of the articles that were accepted by this journal are kind of under-performing, considering their conceits, our usual citation rates for that type of work, etc.

The point of this axe grinding is to encourage you to take a similar quantitative look at your own work if you should happen to be feeling down in the dumps after another insult directed at you by the system. This is not for external bragging, nobody gives a crap about the behind-the-curtain reality of JIF, h-index and the like. You aren’t going to convince anyone that your work is better just because it outpoints the JIF of a journal it didn’t get published in. Editors at these journals are going to continue to wring their hands about their JIF, refuse to face the facts that their conceits about what “belongs” and “is high impact” in their journal are flawed and continue to reject your papers that would help their JIF at the same rate. It’s not about that.

This is about your internal dialogue and your Imposter Syndrome. If this helps, use it.

JAMA discourages pre-print deposition

April 25, 2019

An alert from the Twitters points us to the author guidelines for JAMA. They appear to be the instructions for all JAMA titles, even though the tweet refers specifically to JAMA Psychiatry.

The critical bit:

Public dissemination of manuscripts prior to, simultaneous with, or following submission to this journal, such as posting the manuscript on preprint servers or other repositories, is discouraged, and will be considered in the evaluation of manuscripts submitted for possible publication in this journal. The evaluation will involve making a determination of whether publication of the submitted manuscript will add meaningful new information to the medical literature or will be redundant with information already disseminated with the posting of the preprint. Authors should provide information about any preprint postings, including copies of the posted manuscript and a link to it, at the time of submission of the manuscript to this journal

JAMA is not keen on pre-prints. They threaten that they will not accept manuscripts for publication on the basis that they are now “redundant” with information “already disseminated”. They require that you send them “Copies of all related or similar manuscripts and reports (ie, those containing substantially similar content or using the same or similar data) that have been previously published or posted, or are under consideration elsewhere must be provided at the time of manuscript submission”.

Good luck with that JAMA. I predict that they will fight the good fight for a little while but will eventually cave. As you know, I think that the NIH policy encouraging pre-prints was the watershed moment. The fight is over. It’s down to cleaning out pockets of doomed resistance. Nothing left but the shouting. Etc. NIH grant success is a huge driver of the behavior of USA biomedical researchers. Anything that is perceived as enhancing the chances of a grant being awarded is very likely to be adopted by majorities of applicants. This is particularly important to newer applicants because they have fewer pubs to rely upon and the need is urgent to show that they are making progress in their newly independent careers. So the noobs are establishing a pre-print habit/tradition for themselves that is likely to carry forward in their careers. It’s OVER. Preprints are here and will stay.

My prediction is that authors will start avoiding peer-reviewed publication venues that discourage (this JAMA policy is more like ‘prevent’) pre-print communication. The more prestigious journals can probably hold out for a little while. If authors perceive a potential career benefit by being accepted in a JAMA title that is higher than the value of pre-printing, fine, they may hesitate until they’ve gotten rejected. My bet is that, in the main, we will evolve to a point where authors won’t put up with this. There are too many reasons, including establishment of scientific priority, that will push authors away from journals which oppose pre-prints.

Anyone else know of other publishers or journal titles that are aggressively opposing pre-prints?

Introduction versus Discussion

April 23, 2019

A twitter observation suggests that some people’s understanding of what goes in the Introduction to a paper is different from mine.

In my view, you are citing things in the Introduction to indicate what motivated you to do the study and to give some insight into why you are using these approaches. Anything that was published after you wrote the manuscript did not motivate you to conduct the study. So there is no reason to put a citation to this new work in the Introduction. Unless, of course, you do new experiments for a revision and can fairly say that they were motivated by the paper that was published after the original submission.

It’s slightly assy for a reviewer to demand that you cite a manuscript that was published after the version they are reviewing was submitted. Slightly. More than slightly if that is the major reason for asking for a revision. But if a reviewer is already suggesting that revisions are in order, it is no big deal IMO to see a suggestion you refer to and discuss a recently published work. Discuss. As in the Discussion. As in there may be fresh off the presses results that are helpful to the interpretation and contextualization of your new data.

These results, however, do not belong in the Introduction. That is reserved for the motivating context for doing the work in the first place.

Infuriating manuscripts

January 17, 2019

I asked what percentage of manuscripts that you receive to review make you angry that the authors dared to submit such trash. The response did surprise me, I must confess.

I feel as though my rate is definitely under 5%.

Suggest women as potential reviewers

December 12, 2018

A recent editorial in Neuropsychopharmacology by Chloe J. Jordan and the Editor in Chief, William A. Carlezon Jr. overviews the participation of scientists in the journal’s workings by gender. I was struck by Figure 5 because it is a call for immediate and simple action by all of you who are corresponding authors, and indeed any authors.

The upper two pie charts show that between 25% and 34% of the potential reviewer suggestions in the first half of 2018 were women. Interestingly, the suggestions for manuscripts from corresponding authors who are themselves women were only slightly more gender balanced than were the suggestions for manuscripts with male corresponding authors.


Do Better.

I have for several years now tried to remember to suggest equal numbers of male and female reviewers as a default and occasionally (gasp) can suggest more women than men. So just do it. Commit yourself to suggest at least as many female reviewers as you do male ones for each and every one of your manuscripts. Even if you have to pick a postdoc in a given lab.

I don’t know what to say about the lower pie charts. It says that women corresponding authors nominate female peers to exclude at twice the rate of male corresponding authors. It could be a positive in the sense that women are more likely to think of other women as peers, or potential reviewers of their papers. They would therefore perhaps suggest more female exclusions compared with a male author that doesn’t bring as many women to mind as relevant peers.

That’s about the most positive spin I can think of for that so I’m going with it.

Your Manuscript in Review: It is never an idle question

August 22, 2018

I was trained to respond to peer review of my submitted manuscripts as straight up as possible. By this I mean I was trained (and have further evolved in training postdocs) to take every comment as legitimate and meaningful while trying to avoid the natural tendency to view it as the work of an illegitimate hater. This does not mean one accepts every demand for a change or alters one’s interpretation in preference for that of a reviewer. It just means you take it seriously.

If the comment seems stupid (the answer is RIGHT THERE), you use this to see where you could restate the point again, reword your sentences or otherwise help out. If the interpretation is counter to yours, see where you can acknowledge the caveat. If the methods are unclear to the reviewer, modify your description to assist.

I may not always reach some sort of rebuttal Zen state of oneness with the reviewers. That I can admit. But this approach guides my response to manuscript review. It is unclear that it guides everyone’s behavior and there are some folks that like to do a lot of rebuttal and relatively less responding. Maybe this works, maybe it doesn’t but I want to address one particular type of response to review that pops up now and again.

It is the provision of an extensive / awesome response to some peer review point that may have been phrased as a question, without incorporating it into the revised manuscript. I’ve even seen this suboptimal approach extend to one or more paragraphs of (cited!) response language.

Hey, great! You answered my question. But here’s the thing. Other people are going to have the same question* when they read your paper. It was not an idle question for my own personal knowledge. I made a peer review comment or asked a peer review question because I thought this information should be in the eventual published paper.

So put that answer in there somewhere!

___

*As I have probably said repeatedly on this blog, it is best to try to treat each of the three reviewers of your paper (or grant) as 33.3% of all possible readers or reviewers, instead of mentally dismissing them as that weird outlier crackpot**.

**this is a conclusion for which you have minimal direct evidence.

Journal Citation Metrics: Bringing the Distributions

July 3, 2018

The latest Journal Citation Reports has been released, updating us on the latest JIF for our favorite journals. New for this year is….

…..drumroll…….

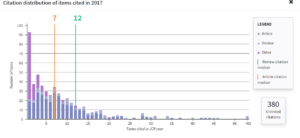

provision of the distribution of citations per cited item. At least for the 2017 year.

The data … represent citation activity in 2017 to items published in the journal in the prior two years.

This is awesome! Let’s dive right in (click to enlarge the graphs). The JIF, btw, is 5.970.

Oh, now this IS a pretty distribution, is it not? No nasty review articles to muck it up and the “other” category (editorials?) is minimal. One glaring omission is that there doesn’t appear to be a bar for 0 citations, surely some articles are not cited. This makes interpretation of the article citation median (in this case 5) a bit tricky. (For one of the distributions that follows, I came up with the missing 0 citation articles constituting anywhere from 17 to 81 items. A big range.)

Still, the skew in the distribution is clear and familiar to anyone who has been around the JIF critic voices for any length of time. Rare highly-cited articles skew just about every JIF upward from what your mind thinks it represents, i.e., the median article for the journal. Still, no biggie, right? 5 versus 5.970 is not all that meaningful. If your article in this journal from the past two years got 4-6 citations in 2017, you are doing great, right there in the middle.
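For anyone who wants to see the skew in miniature, here is a toy illustration with invented citation counts (not data from any of these journals). A handful of heavily cited papers drags the mean, which is roughly what the JIF is (citations per citable item), well above the median. The second half shows why that missing 0-citation bar matters: tacking on uncited items pulls the median down, so a median computed without them runs high.

```python
from statistics import mean, median

# Invented JCR-year citation counts for 20 articles: mostly modest, a few big hits.
counts = [0, 1, 2, 2, 3, 3, 4, 4, 5, 5, 5, 6, 6, 7, 8, 9, 12, 18, 35, 60]
print(mean(counts))    # 9.75, the JIF-like average pulled up by the tail
print(median(counts))  # 5.0, the "typical" article

# Suppose ten more items were never cited and left off the histogram.
with_zeros = counts + [0] * 10
print(mean(with_zeros))    # 6.5
print(median(with_zeros))  # 3.0
```

Same journal, same hits, but the median you bench race against depends a lot on how many uncited dogs are hiding off the chart.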

Let’s check another Journal….

Ugly. Look at all those “Other” items. And the skew from the highly-cited items, including some reviews, is worse. JIF is 11.982 and the article citation median is 7. So among other things, many authors are going to feel like they impostered their way into this journal since a large part of the distribution is going to fall under the JIF. Don’t feel bad! Even if you got only 9-11 citations, you are above the median and with 6-8 you are right there in the hunt.

Not too horrible looking, although clearly the review articles contribute a big skew, possibly even more than in the second journal, where the reviews are seemingly more evenly distributed in terms of citations. Now, I will admit I am a little surprised that reviews don’t do even better compared with primary research articles. It seems to me like they would get cited more than this (for both of these journals). The article citation median is 4 and the JIF is 6.544, making for a slightly greater range than the first one, if you are trying to bench race your citations against the “typical” for the journal.

The first takeaway message from these new distributions, viewed along with the JIF, is that you can get a much better idea of how your articles are faring (in your favorite journals; these are just three) compared to the expected value for that journal. Sure, sure, we all knew at some level that the distribution contributing to JIF was skewed and that the median would be a better number to reflect the colloquial sense of typical, average performance for a journal.

The other takeaway is a bit more negative and self-indulgent. I do it so I’ll give you cover for the same.

The fun game is to take a look at the articles that you’ve had rejected at a given journal (particularly when rejection was on impact grounds) but subsequently published elsewhere. You can take your citations in the “JCR” (aka second) year of the two years after it was published and match that up with the citation distribution of the journal that originally rejected your work. In the past, if you met the JIF number, you could be satisfied they blew it and that your article indeed had impact worthy of their journal. Now you can take it a step farther because you can get a better idea of when your article beat the median. Even if your actual citations are below the JIF of the journal that rejected you, your article may have been one that would have boosted their JIF by beating the median.

Still with me, fellow axe-grinders?

Every editorial staff I’ve ever seen talk about journal business in earnest is concerned about raising the JIF. I don’t care how humble or soaring the baseline, they all want to improve. And they all want to beat some nearby competitors. Which means that if they have any sense at all, they are concerned about decreasing the uncited dogs and increasing the articles that will be cited in the JCR year above their JIF. Hopefully these staffs also understand that they should be beating their median citation year over year to improve. I’m not holding my breath on that one. But this new publication of distributions (and the associated chit chat around the campfire) may help with that.

Final snark.

I once heard someone concerned with JIF of a journal insist that they were not “systematically overlooking good papers” meaning, in context, those that would boost their JIF. The rationale for this was that the manuscripts they had rejected were subsequently published in journals with lower JIFs. This is a fundamental misunderstanding. Of course most articles rejected at one JIF level eventually get published down-market. Of course they do. This has nothing to do with the citations they eventually accumulate. And if anything, the slight downgrade in journal cachet might mean that the actual citations slightly under-represent what would have occurred at the higher JIF journal, had the manuscript been accepted there. If Editorial Boards are worried that they might be letting bigger fish get away, they need to look at the actual citations of their rejects, once published elsewhere. And, back to the story of the day, those actual citations need to be compared with the median for article citations rather than the JIF.

Self plagiarism

June 8, 2018

A journal has recently retracted an article for self-plagiarism:

Just going by the titles, this may be a case where review or theory material is published over and over in multiple venues.

I may have complained on the blog once or twice about people in my fields of interest that publish review after thinly updated review year after year.

I’ve seen one or two people use this strategy, in addition to a high rate of primary research articles, to blanket the world with their theoretical orientations.

I’ve seen a small cottage industry do the “more reviews than data articles” strategy for decades in an attempt to budge the needle on a therapeutic modality that shows promise but lacks full financial support from, e.g., NIH.

I still don’t believe “self-plagiarism” is a thing. To me plagiarism is stealing someone else’s ideas or work and passing them off as one’s own. When art critics see themes from an artist’s own prior work being perfected or included or echoed in the masterpiece, do they scream “plagiarism”? No. But if someone else does it, that is viewed as copying. And lesser. I see academic theoretical and even interpretive work in this vein*.

To my mind the publishing industry has a financial interest in this conflation because they are interested in novel contributions that will presumably garner attention and citations. Work that is duplicative may be seen as lesser because it divides up citation to the core ideas across multiple reviews. Given how the scientific publishing industry leeches off content providers, my sympathies are…..limited.

The complaint from within the house of science, I suspect, derives from a position of publishing fairness? That some dude shouldn’t benefit from constantly recycling the same arguments over and over? I’m sort of sympathetic to this.

But I think it is a mistake to give in to the slippery slope of letting the publishing industry establish this concept of “self-plagiarism”. The risks for normal science pubs that repeat methods are too high. The risks for “replication crisis” solutions are too high; after all, a substantial replication study would require duplicative Introductory and interpretive comment, would it not?

__

*although “copying” is perhaps unfair and inaccurate when it comes to the incremental building of scientific knowledge as a collaborative endeavor.

Citing Preprints

May 23, 2018

In my career I have cited many non-peer-reviewed sources within my academic papers. Off the top of my head this has included:

- Government reports

- NGO reports

- Longitudinal studies

- Newspaper items

- Magazine articles

- Television programs

- Personal communications

I am aware of at least one journal that suggests that “personal communications” should be formatted in the reference list just like any other reference, instead of the usual parenthetical comment.

It is much, much less common now but it was not that long ago that I would run into a citation of a meeting abstract with some frequency.

The entire point of citation in a scientific paper is to guide the reader to an item from which they can draw their own conclusions and satisfy their own curiosity. One expects, without having to spell it out each and every time, that a citation of a show on ABC has a certain quality to it that is readily interpreted by the reader. Interpreted as different from a primary research report or a news item in the Washington Post.

Many fellow scientists also make a big deal out of their ability to suss out the quality of primary research reports merely by the place in which they were published. Maybe even by the lab that published them.

And yet.

Despite all of this, I have seen more than one reviewer object to citing a preprint that has been posted on bioRxiv.

As if it is somehow misleading the reader.

How can all these above mentioned things be true, be such an expectation of reader engagement that we barely even mention it but whooooOOOAAAA!

All of a sudden the citation of a preprint is somehow unbelievably confusing to the reader and shouldn’t be allowed.

I really love* the illogical minds of scientists at times.