NHLBI data shows grant percentile does not predict paper bibliometrics

February 20, 2014

A reader pointed me to this News Focus piece in Science, which referred to Danthi et al., 2014.

Danthi N, Wu CO, Shi P, Lauer M. Percentile ranking and citation impact of a large cohort of National Heart, Lung, and Blood Institute-funded cardiovascular R01 grants. Circ Res. 2014 Feb 14;114(4):600-6. doi: 10.1161/CIRCRESAHA.114.302656. Epub 2014 Jan 9.

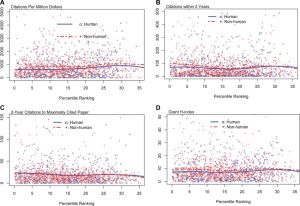

I think Figure 2 makes the point, even without knowing much about the particulars, and the last part of the Abstract makes it clear:

We found no association between percentile rankings and citation metrics; the absence of association persisted even after accounting for calendar time, grant duration, number of grants acknowledged per paper, number of authors per paper, early investigator status, human versus nonhuman focus, and institutional funding. An exploratory machine learning analysis suggested that grants with the best percentile rankings did yield more maximally cited papers.

The only surprising thing in all of this was a quote attributed to the senior author, Michael Lauer, in the News Focus piece.

“Peer review should be able to tell us what research projects will have the biggest impacts,” Lauer contends. “In fact, we explicitly tell scientists it’s one of the main criteria for review. But what we found is quite remarkable. Peer review is not predicting outcomes at all. And that’s quite disconcerting.”

Lauer is head of the Division of Cardiovascular Research at the NHLBI and has been there since 2007. Long enough to know what time it is. More than long enough.

The take-home message is exceptionally clear. It is a message that most scientists who have stopped to think about it for half a second have already arrived at.

Science is unpredictable.

Addendum: I should probably point out, for readers who are not familiar with the whole NIH grant system, that the major unknown here is the fate of unfunded projects. It could very well be the case that the projects that manage to win funding do not differ much from one another, but that the ones kept from funding would have failed miserably had they been funded. Obviously we can’t know this until the NIH decides to do a study in which it randomly picks up grants across the entire distribution of priority scores. If I were a betting man, I’d have to lay even odds on the upper and lower halves of the score distribution 1) not differing vs. 2) the upper half doing better in terms of paper metrics. I really don’t have a firm prediction; I could see it either way.

February 20, 2014 at 1:31 pm

I mean, there’s not even a sniff of a downtick toward the 35%ile, there, which really makes you wonder what the data would look like in a hypothetical universe in which every application submitted was funded. There must be a threshold at which impact starts to crash with increasing score, but where is it? Is it time to just be done with this nonsense and get a lotto machine?

…and wtf is going on with that shallow but consistent dip in the 15%ile range for all those criteria?

February 20, 2014 at 3:00 pm

I’m shocked. This is my shocked face…………….

February 20, 2014 at 3:29 pm

This supports what I’ve thought for some time now: that study sections shouldn’t bother meeting face to face anymore, or even discussing applications. The NIH should simply solicit priority scores for grants, take the top 50% based on average priority score, and RANDOMLY choose 20-30% of these non-triaged grants to fund. It would obviously not change impact or productivity, while saving a lot of money on conducting the face-to-face panels. It would also remove a significant portion of the bias in funding patterns toward particular individuals.
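To make the proposed triage-then-lottery scheme concrete, here is a minimal sketch. The function name `lottery_fund` and the application IDs are hypothetical, and it assumes lower average priority scores are better (as on the NIH scale) and that "20-30%" means a fraction of the non-triaged top half.

```python
import random
from statistics import mean


def lottery_fund(scores, fund_fraction=0.25, seed=None):
    """Hypothetical sketch: triage to the better half by mean priority score,
    then fund a random subset of the survivors.

    scores: dict mapping application ID -> list of reviewer priority scores
            (lower is better, as assumed for the NIH scale).
    fund_fraction: share of the non-triaged half to fund (0.20-0.30 in the proposal).
    """
    rng = random.Random(seed)
    # Rank applications by their average priority score, best first.
    ranked = sorted(scores, key=lambda app: mean(scores[app]))
    # Keep the top 50%; everything below is triaged.
    survivors = ranked[: len(ranked) // 2]
    # Draw the funded set at random; rank within the top half plays no further role.
    n_funded = round(len(survivors) * fund_fraction)
    return rng.sample(survivors, n_funded)


# Usage with made-up applications: 40 apps, 20 survive triage, 5 are funded.
apps = {f"A{i}": [10 + i, 12 + i, 11 + i] for i in range(40)}
funded = lottery_fund(apps, fund_fraction=0.25, seed=1)
print(len(funded))  # 5
```

Scores only decide who survives triage; within the top half every application has the same chance, which is the point of the proposal.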

February 21, 2014 at 12:03 am

It may be that citation metrics are crap themselves and that they do not measure impact. Or it could be that people get their funding from whatever institute this data comes from but publish elsewhere, and metrics across disciplines are not comparable. It can also be that it takes longer to determine impact. There are many ways to explain this data and still believe in the current system. I think it’s broken, but this is no proof.

February 21, 2014 at 5:50 am

I’m very surprised by these stats too. It makes me wonder what would happen if they took into account the extent to which the researchers did what they proposed in the grant versus diverged from their original ideas. I know that in our lab some grant money is used for things pretty far from what was originally proposed, while it is still reported that this is what was done with said grant money.

February 21, 2014 at 5:52 am

I suppose we can’t rule out the possibility that program has simply gotten highly adept at picking out diamonds that have fallen in the rough during peer review. In that case, the scatter points in the 30s would not be representative of how most of the similarly scored studies would have fared impact-wise had they too been funded. I’m not sure I find that possibility all that compelling an explanation, but it’s plausible.

February 21, 2014 at 6:25 am

A grant is not a contract, iBAM

February 21, 2014 at 6:38 am

I move that from now on NIH study sections assign perfect scores to all applications in the top 25% based on preliminary reviewer scores and that we don’t bother to meet or vote. The money and time saved should increase scientific productivity and allow more applications to be funded. Alternatively, just randomly fund 25% of all applications.

February 21, 2014 at 6:52 am

Yes Joe, PI types will love knowing the selection from 25% to the funded 12% was by PO decision, rather than study section decision.

February 21, 2014 at 6:57 am

DM, If the data indicate that after a certain point, the scores don’t matter, then why are we bothering to do it? Just so we can fund our buddies and enforce our biases?

February 21, 2014 at 8:04 am

The scores don’t matter for citations and paper count. This doesn’t mean that peer review rankings do not affect other measures of grant outcome.

February 21, 2014 at 8:17 am

JL “It may be that citation metrics are crap themselves and that they do not measure impact.”

True, and there’s no doubt we lean on citations primarily for lack of a better yardstick for impact. But regardless, citations are key to how reviewers assess an applicant’s standing in the field and the importance of the work they’re engaged in. So if citations turn out to be no predictor for true “significantly advancing human health!” impact, and they may well not be, we’re merely left with an alternative reason for why the cost of peer review is no longer worth its poor efficacy in identifying the best science to fund.

February 21, 2014 at 8:48 am

Would you send your grant to a study section where there was a 50% chance it’d be assigned to actual people and a 50% chance it’d be put on the dart board?

February 21, 2014 at 5:15 pm

Not sure how valuable this observation is, but my highest impact papers have all been about projects that were (at the time) unfunded.

Isn’t that normal procedure? 1) Do cool project, 2) use data from cool project to write grant proposal, 3) publish on cool project, 4) use grant money to do the next cool something, unrelated to the proposal.

February 21, 2014 at 5:21 pm

@becca:

I would seriously take the dart board option. If there’s a 5% chance of me getting funded by a dart, at least I know that I have a reasonably even chance of having funding if I submit 20 applications.

The way it is now, left to reviewers who by all accounts can’t pick a winning proposal out of their asses if it were on fire, gives me no assurance of anything.
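The dart-board arithmetic can be made explicit. Assuming, purely for illustration, that each of 20 applications independently has a 5% chance of being funded, the expected number of awards is 20 × 0.05 = 1, and the chance of at least one award is a bit better than even:

$$
P(\text{at least one funded}) = 1 - (1 - 0.05)^{20} = 1 - 0.95^{20} \approx 1 - 0.358 \approx 0.64
$$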

February 24, 2014 at 4:37 pm

I’d want to see this relationship broken out by field. I feel like the relationships could be dampened by different citation structures in different fields. Say, if a field has a lower overall citation rate, the power to detect the relationship with this amount of data might be lower. It would be interesting to see the relationships over a longer period of time, too.

February 25, 2014 at 1:45 pm

[…] (across fields, ‘big science’ groups are getting hammered. This is very short-sighted) NHLBI data shows grant percentile does not predict paper bibliometrics Stuart Schreiber on how H3 Biomedicine is poised for ‘radical change’ Supercomputer Beagle can […]

February 27, 2014 at 4:15 am

All it means is that the goofs doing heart and lung stuff don’t know how to review grants. Us neuro types are way better at this stuff, no doubt.
