CHE digs out some excuses from NIH as to why they are doing so little about Ginther

January 13, 2014

As you know I am distinctly unimpressed with the NIH’s response to the Ginther report which identified a disparity in the success rate of African-American PIs when submitting grant applications to the NIH.

The NIH response (i.e., where they have placed their hard money investment in change) has been to blame pipeline issues. The efforts are directed at getting more African-American trainees into the pipeline and, somehow, training them better. The subtext here is twofold.

First, it argues that the problem is that the existing African-American PIs submitting to the NIH just kinda suck. They are deserving of lower success rates! Clearly. Otherwise, the NIH would not be looking in the direction of getting new ones. Right? Right.

Second, it argues that there is no actual bias in the review of applications. Nothing to see here. No reason to ask about review bias or anything. No reason to ask whether the system needs to be revamped, right now, to lead to better outcomes.

A journalist has been poking around a bit. The most interesting bits involve Collins’ and Tabak’s initial response to Ginther and the current feigned-helplessness tack that is being followed.

From Paul Basken in the Chronicle of Higher Education:

Regarding the possibility of bias in its own handling of grant applications, the NIH has taken some initial steps, including giving its top leaders bias-awareness training. But a project promised by the NIH’s director, Francis S. Collins, to directly test for bias in the agency’s grant-evaluation systems has stalled, with officials stymied by the legal and scientific challenges of crafting such an experiment.

“The design of the studies has proven to be difficult,” said Richard K. Nakamura, director of the Center for Scientific Review, the NIH division that handles incoming grant applications.

Hmmm. “difficult”, eh? Unlike making scientific advances, hey, that stuff is easy. This, however, just stumps us.

Dr. Collins, in his immediate response to the Ginther study, promised to conduct pilot experiments in which NIH grant-review panels were given identical applications, one using existing protocols and another in which any possible clue to the applicant’s race—such as name or academic institution—had been removed.

“The well-described and insidious possibility of unconscious bias must be assessed,” Dr. Collins and his deputy, Lawrence A. Tabak, wrote at the time.

Oh yes, I remember this editorial distinctly. It seemed very well-intentioned. Good optics. Did we forget that the head of the NIH is a political appointment with all that that entails? I didn’t.

The NIH, however, is still working on the problem, Mr. Nakamura said. It hopes to soon begin taking applications from researchers willing to carry out such a study of possible biases in NIH grant approvals, and the NIH also recently gave Molly Carnes, a professor of medicine, psychiatry, and industrial and systems engineering at the University of Wisconsin at Madison, a grant to conduct her own investigation of the matter, Mr. Nakamura said.

The legal challenges include a requirement that applicants get a full airing of their submission, he said. The scientific challenges include figuring out ways to get an unvarnished assessment from a review panel whose members traditionally expect to know anyone qualified in the field, he said.

What a freaking joke. Applicants have to get a full airing and will have to opt in, eh? Funny, I don’t recall ever being asked to opt in to any of the non-traditional review mechanisms that the CSR uses. These include phone-only reviews, video-conference reviews and online chat-room reviews. Heck, they don’t even so much as disclose that this is what happened to your application! So the idea that it is a “legal” hurdle that is solved by applicants volunteering for their little test is clearly bogus.

Second, the notion that a pilot study would prevent “full airing” is nonsense. I see very few alternatives other than taking the same pool of applications and putting them through regular review as the control condition and then trying to do a bias-decreasing review as the experimental condition. The NIH is perfectly free to use the normal, control review as the official review. See? No difference in the “full airing”.

I totally agree it will be scientifically difficult to try to set up PI blind review but hey, since we already have so many geniuses calling for blinded review anyway…this is well worth the effort.

But “blind” review is not the only way to go here. How’s about simply mixing up the review panels a bit? Bring in a panel that is heavy in precisely those individuals who have struggled with lower success rates, based on PI characteristics, University characteristics, training characteristics, etc. See if that changes anything. Take a “normal” panel and provide them with extensive instruction on the Ginther data. Etc. Use your imagination, people; this is not hard.

Disappointingly, the CHE piece contains not one single bit of investigation into the real question of interest. Why is this any different from any other area of perceived disparity between interests and study section outcome at the NIH? From topic domain to PI characteristics (sex and relative age) to University characteristics (like aggregate NIH funding, geography, Congressional district, University type/rank, etc.), the NIH is fully willing to use Program prerogative to redress the imbalance. They do so by funding grants out of order and, sometimes, by setting up funding mechanisms that limit who can compete for the grants.

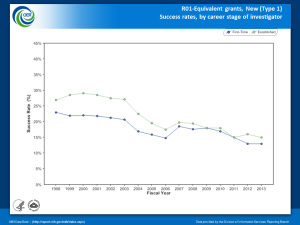

In the recent case of young/recently transitioned investigators they have trumpeted the disparity loudly, hamfistedly and brazenly “corrected” the study section disparity with special paylines and out of order pickups that amount to an affirmative action quota system [PDF].

All with exceptionally poor descriptions of exactly why they need to do so, save “we’re eating our seed corn” and similar platitudes. All without any attempt to address the root problem of why study sections return poorer scores for early stage investigators. All without proving bias, describing the nature of the bias or clearly demonstrating the feared outcome of any such bias.

“Eating our seed corn” is a nice catchphrase but it is essentially meaningless. Especially when there are always more freshly trained PhD scientists eager and ready to step up. Why would we care if a generation is “lost” to science? The existing greybeards can always be replaced by whatever fresh faces are immediately available, after all. And there was very little crying about the “lost” GenerationX scientists, remember. Actually, none, outside of GenerationX itself.

The point being, the NIH did not wait for overwhelming proof of nefarious bias. They just acted very directly to put a quota system in place. Although, as we’ve seen in recent data, this has slipped a bit in the past two Fiscal Years, the point remains.

Why, you might ask yourself, are they not doing the same in response to Ginther?

January 13, 2014 at 11:21 am

My understanding was that Ginther directly controlled for factors such as number of publications, training background, etc., so the quality of the applicants was definitely not the reason for the bias.

January 13, 2014 at 11:51 am

If people bothered to read the full nitty gritty of the report it really makes it hard to sustain many of the usual excuses doesn’t it?

January 13, 2014 at 2:21 pm

I reckon at the very least they could try making the off-site review stage blind. Have the applications initially scored based purely on impact, approach, significance &c and then wait until study section to reveal names and allow productivity, environment and that other stuff to come into play.

But yeah, the question of why this particular example of inequality would req

January 13, 2014 at 2:23 pm

…require such strategies where other forms have been addressed directly is a good one. Is it simply the case that race-oriented affirmative action is seen as more politically incendiary, perhaps? I hope not, because that’s a petty crap reason to treat it any differently than every other form of affirmative action.

January 13, 2014 at 2:33 pm

It is not going to be simple to de-identify NIH grants. They have to be written totally differently, as I’ve argued elsewhere.

It also gets one distracted off into trying to identify THE BIAS when the outcome may be a result of many biases of different types. Bias against the noobs isn’t just one thing either, btw.

Which is why I find this process so cynical and clearly designed to do nothing.

January 13, 2014 at 4:01 pm

Anyone who has ever reviewed grants knows that anonymous proposals will never work. First, especially in this tight funding environment, being a ‘nobody’ kills you. So if you are not a nobody, you will smartly make damn sure that your proposal is easily identified as coming from you.

January 13, 2014 at 7:31 pm

Feckin eedjets! pilot experiments in which NIH grant-review panels were given identical applications, one using existing protocols and another in which any possible clue to the applicant’s race—such as name or academic institution—had been removed.

Any dumbass can figure out who it is from the references and other intrinsic info’. Instead of redacting all that stuff, which just initiates a search by reviewers to find the originator, just modify it! Swap the fuckers and make it credible. This is a lot easier to accomplish at the junior level, if the individuals are unknown and names easily swappable. The proper experimental design is to take a grant and send it out again with 2 different names (one Caucasian, one African American) to different sections. If CSR can’t figure this basic shit out, is it any wonder they can’t get to the bottom of this problem?
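The swap design described in that comment boils down to a paired comparison: each application is scored once under each name version by different panels, so every application serves as its own control. Here is a minimal Python sketch of how such data might be analyzed, assuming numeric scores and randomized assignment of name versions to panels. `paired_name_swap_test` is a hypothetical helper, and the sign-flip permutation test is one reasonable choice of analysis, not anything CSR has proposed.

```python
import random
import statistics


def paired_name_swap_test(scores_a, scores_b, n_perm=10_000, seed=0):
    """Analyze a hypothetical name-swap study.

    scores_a[i] and scores_b[i] are the scores that application i received
    from two different panels, once under name version A and once under
    name version B. Returns the mean paired difference (A - B) and a
    two-sided sign-flip permutation p-value for the null hypothesis that
    the name version has no effect on scores.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty score lists of equal length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = statistics.fmean(diffs)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_perm):
        # Under the null, which version a panel saw is arbitrary, so each
        # paired difference is equally likely to carry either sign.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(statistics.fmean(flipped)) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed labelling itself.
    return observed, (extreme + 1) / (n_perm + 1)


# Sanity check on the plumbing: identical scores under both names -> no effect.
print(paired_name_swap_test([20, 35, 50], [20, 35, 50]))  # (0.0, 1.0)
```

The sanity check only confirms the plumbing. With real data, and lower scores being better, a positive mean difference would mean applications fared worse under name version A.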

January 14, 2014 at 3:22 am

I agree that it is disappointing that it has taken NIH so long to even organize the studies that Collins and Tabak had promised in the Science piece. The issue of blinding is challenging in this context, as TOD notes. Being an unknown is likely to be a substantial “risk factor”. The legal issues that Richard Nakamura is likely referring to relate to the use of review when the score is not intended to be used for making funding decisions. Suppose an application is reviewed twice and gets two substantially different scores. Which one should be used for decision making? However, in my opinion the larger issue that NIH is reluctant to deal with is the inherent uncertainty in peer review scores. If you review an identical application twice, how much would you expect the scores to differ? This is an important parameter to know for any test, but NIH appears never to have attempted to measure it. They may be concerned about the consequences of providing data that undermines the notion that a peer review score is an infinitely accurate and precise measurement of scientific merit, despite the fact that this is absolutely not true.
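The question of how much two reviews of an identical application should differ can at least be framed as a toy model. Below is a minimal Python simulation, assuming (purely for illustration) that each review returns the application’s “true” percentile plus independent Gaussian noise. The function name `simulate_rereview`, the noise model and every parameter value are hypothetical, not NIH data or NIH methodology.

```python
import math
import random


def simulate_rereview(true_score, noise_sd, payline, n_trials=100_000, seed=0):
    """Review one application twice under an assumed Gaussian noise model.

    Each review = true_score + independent N(0, noise_sd**2) error.
    Lower is better (as with NIH percentiles), so a review "funds" the
    application if its score is at or below the payline.

    Returns (mean absolute difference between the two scores,
             fraction of trials in which the two reviews disagree on funding).
    """
    rng = random.Random(seed)
    total_gap = 0.0
    disagreements = 0
    for _ in range(n_trials):
        first = rng.gauss(true_score, noise_sd)
        second = rng.gauss(true_score, noise_sd)
        total_gap += abs(first - second)
        # Count trials where exactly one of the two reviews clears the payline.
        if (first <= payline) != (second <= payline):
            disagreements += 1
    return total_gap / n_trials, disagreements / n_trials


# Hypothetical values: an application whose "true" score sits right at the payline.
SD = 5.0
gap, flip_rate = simulate_rereview(true_score=15.0, noise_sd=SD, payline=15.0)
print(f"simulated mean gap {gap:.2f} vs. theory {2 * SD / math.sqrt(math.pi):.2f}")
print(f"funding decision differs in {flip_rate:.0%} of simulated re-reviews")
```

Under this assumed model the average gap between two reviews of the same application is 2σ/√π, about 1.13 times the noise SD, and an application sitting exactly at the payline gets opposite funding outcomes from the two reviews about half the time. The real value of σ is the parameter the comment says NIH has never measured.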

January 14, 2014 at 5:15 am

Ola — some of this work was done in controlled settings, with made up CVs and made-up names. Names clearly identifiable as women or minorities consistently lead to worse evaluations for those CVs, even though the rest of the CV is identical.

The problem seems to be not intentional bias, but subconscious ideas of what sort of person is ‘appropriate’. How many times have you heard the phrase ‘a good fit’? As in: He/she isn’t a good fit. That’s subconscious bias in action. There is a lot of fascinating research in this area, much of it sponsored by NIH.

January 14, 2014 at 5:19 am

Hey Jeremy…

Interesting you should mention questions about uncertainty in review. I have been itching to do a human study evaluating different review methodologies, to determine which might be most accurate and consistent. I think NIH and any other agency that uses peer-review could save money and do a better job.

How about issuing an RFA, so I can apply?

January 14, 2014 at 6:56 am

Ola- yes. Although I have no problem coming up with overtly and almost cartoonishly Caucasian names, such as N. Robert Marley, PhD, Carl L. Hart, PhD and Winston Hubert McIntosh, MD, how do we know the African American names we pick will work with all reviewers?

JB- of course you are entirely correct that the NIH is deeply invested in pretending that review is perfect and invariant, resulting in precise merit distinctions. Embarrassing that an agency devoted to the scientific method is so fearful of finding out facts about its own behavior but there we have it.

January 14, 2014 at 10:21 am

TOD: It would be interesting if NIH or NSF would do this. I am outside now (in Pittsburgh) but I can certainly ask.

January 14, 2014 at 7:03 pm

“Is it simply the case that race-oriented affirmative action is seen as more politically incendiary, perhaps?”

Well, yeah. Also, when the budget tightens people’s interest in playing fairly tends to dwindle.

“cartoonishly Caucasian names, such as N. Robert Marley, PhD, Carl L. Hart, PhD and Winston Hubert McIntosh, MD”

Thanks for making me smile in the midst of a depressing discussion.

I’m baffled that NIH doesn’t see this as an easy win for them to get. The fraction of grants that we are talking about is relatively small, so it seems the ICs could solve the disparity problem themselves with a tiny bit of selectivity, if they had the prerogative. Therefore the inescapable conclusion is that Collins just doesn’t really care about this.

January 15, 2014 at 7:49 am

The NIH can give 0 fucks about the issue because they know every other field available to their AA investigators is probably just as biased, if not more so, they just don’t have percentages to put on it.

In conclusion, try being less black. Unless they need you for a ‘diversity’ initiative. And by all means we are supposed to get out there and recruit more AAs *lambs* to bring in to academia *slaughter*

January 15, 2014 at 8:59 am

“I’m baffled that NIH doesn’t see this as an easy win for them to get. The fraction of grants that we are talking about is relatively small, so it seems the ICs could solve the disparity problem themselves with a tiny bit of selectivity, if they had the prerogative.”

Exactly. There are so few African-American PIs submitting applications that we’re talking mere handfuls of grants picked up to even out the success rates. It could be so far under the radar (Collins, addressing the IC Directors one on one as he happens across them: “Fix this, ‘kay?”) that nobody would even know what was going on. And thereafter they could say “gee, Ginther must have been a blip, things are much better now”. They are being strategically stupid on this one. IMNSHO.

January 15, 2014 at 10:04 am

If you look at the data in the Ginther report, the biggest difference for African-American applicants is the percentage of “not discussed” applications. For African-Americans, 691/1,149 = 60.1% of the applications were not discussed, whereas for Whites, 23,437/58,124 = 40.3% were not discussed (see supplementary material to the paper). The actual funding curves (funding probability as a function of priority score) are quite similar (Supplementary Figure S1). If applications are not discussed, program has very little ability to make a case for funding, even if this were to be deemed good policy.
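The triage percentages can be checked directly from the counts quoted in that comment. A short Python sketch; the variable names are mine, and the counts are the ones given above from the Ginther supplementary material.

```python
# Counts quoted above from the Ginther supplementary material.
AA_NOT_DISCUSSED, AA_TOTAL = 691, 1_149
WHITE_NOT_DISCUSSED, WHITE_TOTAL = 23_437, 58_124

# Fraction of each group's applications that were triaged (not discussed).
aa_triage = AA_NOT_DISCUSSED / AA_TOTAL
white_triage = WHITE_NOT_DISCUSSED / WHITE_TOTAL

print(f"African-American PIs not discussed: {aa_triage:.1%}")  # -> 60.1%
print(f"White PIs not discussed: {white_triage:.1%}")          # -> 40.3%
print(f"Gap: {(aa_triage - white_triage) * 100:.1f} percentage points")  # -> 19.8
```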

January 15, 2014 at 11:36 am

While that may be true, JB, there are plenty of applications available for pickup, true? As in, far more than would be required to make up the aggregate success rate discrepancy?

See, you are falling right into the Privilege-Thinking that we cannot possibly countenance any redress of discrimination that, gasp, puts the previously UR group *above* the well represented groups even by the smallest smidge. But looking at Supplementary Fig. S1 from Ginther, we note that there are some 15% of the African-American PIs’ grants scoring in the 175 bin (old scoring method, youngsters) that were not funded. Some 55% of all ethnic/racial category grants in the next higher (i.e., worse) scoring bin, 200, were funded. So if Program picks up more of the better scoring applications from African American PIs at the expense of the worse scoring applications of White PIs….MERIT!

Keep in mind that the African American PI application number is only 2% of the White applications. So if we were to follow my suggestion it works like this:

Take the 175 scoring bin in which about 88% of white PIs and 85% of AA PIs were successful. Take a round number of 1,000 apps in that scoring bin and you get a 980/20 PI ratio White/AA. In that bin we’d need 3 more apps funded to get to 100%. In the next bin (200 score), about 56% of White PI apps were funded so taking three from this bin and awarding three more AA PI awards in the next better scoring bin would plunge the White PI award probability from 56% to 55.7%. Whoa, belt up cowboy.

Moving down the curve with the same logic, we find in the 200 score bin that there are about 9 AA PI applications needed to put the 200 score bin to 100%. Looking down to the next worse scoring bin (225) and pulling these 9 apps from White PIs, we end up changing the award probability for these apps from 22% to ..wait for it….. 21.1%.

And so on.
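The bin-by-bin arithmetic above can be reproduced in a few lines. This Python sketch uses the comment’s own assumptions: a hypothetical 1,000-application bin split 980 White / 20 African-American, the same split in every bin (a simplification), and approximate funding fractions read off Supplementary Figure S1. The helper name `white_rate_after_shift` is hypothetical.

```python
def white_rate_after_shift(white_apps, white_funded_frac, awards_moved):
    """White-PI award rate in a score bin after `awards_moved` of its awards
    are reassigned to unfunded African-American PI applications in the
    next better-scoring bin (old NIH scoring: lower is better)."""
    funded = white_apps * white_funded_frac
    return (funded - awards_moved) / white_apps


# The comment's round numbers: 1,000 applications per bin, split 980 White / 20 AA.
WHITE_APPS, AA_APPS = 980, 20

# 175 bin: ~85% of the 20 AA applications funded, leaving ~3 unfunded.
moved_from_200 = round(AA_APPS * (1 - 0.85))
# Take those awards from the 200 bin, where ~56% of White applications were funded.
rate_200 = white_rate_after_shift(WHITE_APPS, 0.56, moved_from_200)

# 200 bin: the comment's figure of ~9 unfunded AA applications, taken from
# the 225 bin, where ~22% of White applications were funded.
rate_225 = white_rate_after_shift(WHITE_APPS, 0.22, 9)

print(moved_from_200, f"{rate_200:.1%}", f"{rate_225:.1%}")  # -> 3 55.7% 21.1%
```

Each step moves the White-PI award rate in the donor bin by well under one percentage point, which is the comment’s point.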

Another way to look at it is to take JB’s triage numbers from above. To move to a 40% triage rate for the AA PI applications, we need to shift 20%, or about 230 applications, into the discussed pile. This represents a whopping 0.4% of the White PI apps being shifted onto the triage pile to keep the numbers discussed the same.
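The triage-shift version of the argument works out the same way from the quoted Ginther counts. A minimal Python sketch; the 20% shift is the comment’s figure, and it assumes the total number of discussed applications is held fixed by triaging an equal number of White PI applications.

```python
# Counts from the Ginther supplementary material quoted earlier in the thread.
AA_TOTAL, AA_NOT_DISCUSSED = 1_149, 691
WHITE_TOTAL, WHITE_NOT_DISCUSSED = 58_124, 23_437

# Move ~20% of all AA applications from "not discussed" to "discussed".
to_shift = round(0.20 * AA_TOTAL)
new_aa_triage = (AA_NOT_DISCUSSED - to_shift) / AA_TOTAL

# Keep the number discussed constant by triaging the same count of White PI apps.
white_share = to_shift / WHITE_TOTAL
new_white_triage = (WHITE_NOT_DISCUSSED + to_shift) / WHITE_TOTAL

print(to_shift)                    # -> 230
print(f"{new_aa_triage:.1%}")      # -> 40.1%
print(f"{white_share:.1%}")        # -> 0.4%
print(f"{new_white_triage:.1%}")   # -> 40.7%
```

In other words, the White PI triage rate would move from about 40.3% to about 40.7%.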

These are entirely trivial numbers in terms of the “hit” to the chances of White PIs and yet you could easily equalize the success rate or award probability for African-American PIs. It is even more astounding that this could be done by picking up African American PI applications that scored better than the White PI applications that would go unfunded to make up the difference.

Tell me how this is not a no-brainer?
